Description:

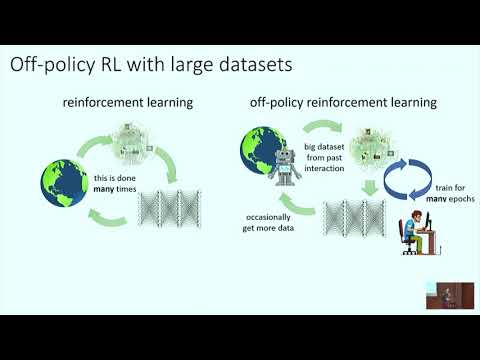

Explore deep reinforcement learning applications in real-world scenarios through this lecture by Sergey Levine of the University of California, Berkeley. Delve into the challenges of, and solutions for, off-policy reinforcement learning with large datasets, covering both model-free and model-based approaches. Learn about QT-Opt, an off-policy Q-learning algorithm designed to run at scale, and its application to robotic grasping. Discover how to address common problems in reinforcement learning, such as training on irrelevant data, and understand how temporal difference models and Q-functions can act as implicit models. Finally, gain insight into optimizing over valid states and into applying model-based reinforcement learning to dexterous manipulation tasks.
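The listing only names QT-Opt. The following is a minimal sketch, assuming the scheme described in the QT-Opt paper (Kalashnikov et al., 2018). In that scheme, the maximization over continuous actions inside the Q-learning target is approximated with the cross-entropy method (CEM) rather than a separate actor network. The function names `cem_argmax`, `bellman_target`, and `toy_q`, the toy Q-function, and all parameter values are hypothetical. The published system adds machinery this sketch omits, such as target networks and a learned neural Q-function over camera images.

```python
import random
from typing import Callable, List

Action = List[float]


def cem_argmax(q: Callable[[Action], float], dim: int, iters: int = 20,
               samples: int = 64, elites: int = 10, seed: int = 0) -> Action:
    """Approximate argmax_a q(a) over a continuous action space with the
    cross-entropy method (CEM). Hypothetical helper for illustration."""
    rng = random.Random(seed)
    mean = [0.0] * dim  # start from a broad Gaussian centered at the origin
    std = [1.0] * dim
    for _ in range(iters):
        # Sample candidate actions from the current diagonal Gaussian.
        batch = [[rng.gauss(m, s) for m, s in zip(mean, std)]
                 for _ in range(samples)]
        # Keep the highest-scoring candidates (the "elites").
        top = sorted(batch, key=q, reverse=True)[:elites]
        # Refit the Gaussian to the elites; floor the std so it never hits zero.
        mean = [sum(a[i] for a in top) / elites for i in range(dim)]
        std = [max(1e-3, (sum((a[i] - mean[i]) ** 2 for a in top) / elites) ** 0.5)
               for i in range(dim)]
    return mean


def bellman_target(reward: float, done: bool,
                   q_next: Callable[[Action], float], dim: int,
                   gamma: float = 0.9) -> float:
    """One-step Q-learning target r + gamma * max_a' Q(s', a'), with the max
    approximated by CEM. q_next stands in for Q(s', .) at a fixed next state."""
    if done:
        return reward  # no bootstrapping past a terminal state
    best_action = cem_argmax(q_next, dim)
    return reward + gamma * q_next(best_action)


# Hypothetical toy Q-function with its maximum (1.0) at action (0.5, -0.3).
def toy_q(a: Action) -> float:
    return 1.0 - ((a[0] - 0.5) ** 2 + (a[1] + 0.3) ** 2)


if __name__ == "__main__":
    print(cem_argmax(toy_q, dim=2))                   # close to [0.5, -0.3]
    print(bellman_target(1.0, False, toy_q, dim=2))   # close to 1.0 + 0.9 * 1.0
```

Using a sampling-based optimizer keeps the method a pure Q-learning approach: the same CEM routine can pick actions when acting and compute the max in the training target.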

Deep Reinforcement Learning in the Real World - Sergey Levine

#Computer Science

#Machine Learning

#Reinforcement Learning

#Deep Learning

#Deep Reinforcement Learning

#Model Based Reinforcement Learning