Description:

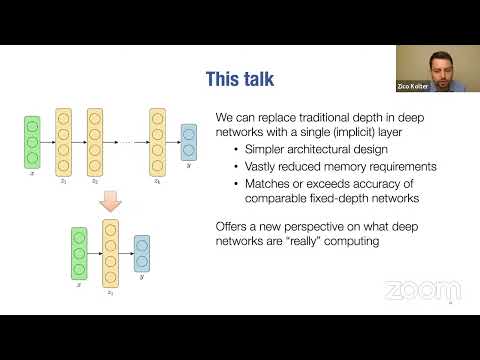

Explore deep equilibrium models (DEQs) and monotone operator equilibrium networks (monDEQs) in this 46-minute lecture by Zico Kolter for the International Mathematical Union. The lecture covers the monDEQ framework, which guarantees that the fixed point is unique and allows it to be computed with efficient operator splitting methods. Learn how to bound the Lipschitz constants of monDEQ models, derive generalization bounds for these "infinitely deep" networks, and characterize their robustness. See how monDEQs connect to mean-field inference, and how this connection yields Boltzmann-machine-like models whose inference is guaranteed to converge to a globally optimal solution. The lecture also examines practical applications in language modeling and image segmentation, and discusses the open theoretical and algorithmic challenges of DEQs.
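To make "fixed point uniqueness" and "operator splitting" concrete, here is a minimal pure-Python sketch. It is not taken from the lecture and rests on several assumptions. It assumes a ReLU nonlinearity and a weight matrix parameterized as W = (1 − m)I − AᵀA + B − Bᵀ, so that I − W is strongly monotone with constant m. It then finds the equilibrium z* = ReLU(W z* + bias) with forward-backward splitting. The names `mon_weight`, `forward_backward`, `A`, `B`, `m`, and `bias` are hypothetical. `bias` stands in for the input injection U x + b. The step size uses the Frobenius norm of I − W, an upper bound on its spectral norm, so it stays within the usual 2m/L² convergence condition.

```python
import random

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def matvec(X, v):
    return [sum(xij * vj for xij, vj in zip(row, v)) for row in X]

def transpose(X):
    return [list(col) for col in zip(*X)]

def relu(v):
    return [max(0.0, t) for t in v]

def mon_weight(A, B, m):
    """Hypothetical helper: W = (1 - m) I - A^T A + B - B^T.
    The symmetric part of I - W is m I + A^T A, which is >= m I (strong monotonicity)."""
    n = len(A[0])
    AtA = matmul(transpose(A), A)
    return [[(1.0 - m) * float(i == j) - AtA[i][j] + B[i][j] - B[j][i]
             for j in range(n)] for i in range(n)]

def forward_backward(W, bias, m, z0=None, tol=1e-10, max_iter=50000):
    """Solve z = ReLU(W z + bias) by forward-backward splitting:
    z <- ReLU(z - alpha * ((I - W) z - bias))."""
    n = len(W)
    # ||I - W||_F^2 >= L^2 (L = spectral norm), so alpha = m / ||I - W||_F^2 <= 2m / L^2.
    fro_sq = sum((float(i == j) - W[i][j]) ** 2 for i in range(n) for j in range(n))
    alpha = m / fro_sq
    z = list(z0) if z0 is not None else [0.0] * n
    for _ in range(max_iter):
        Wz = matvec(W, z)
        z_new = relu([(1 - alpha) * zi + alpha * (wzi + bi)
                      for zi, wzi, bi in zip(z, Wz, bias)])
        if max(abs(a - b) for a, b in zip(z_new, z)) < tol:
            return z_new
        z = z_new
    return z

# Usage: a small random monotone layer (hypothetical sizes and values).
rng = random.Random(0)
n, m = 4, 0.5
A = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
B = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
W = mon_weight(A, B, m)
bias = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # stands in for U x + b
z_star = forward_backward(W, bias, m)
```

Because I − W is strongly monotone, the iteration should reach the same equilibrium from any starting point. For example, calling `forward_backward(W, bias, m, z0=[5.0] * n)` should agree with `z_star` up to the tolerance.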

Deep Neural Networks via Monotone Operators
