Description:

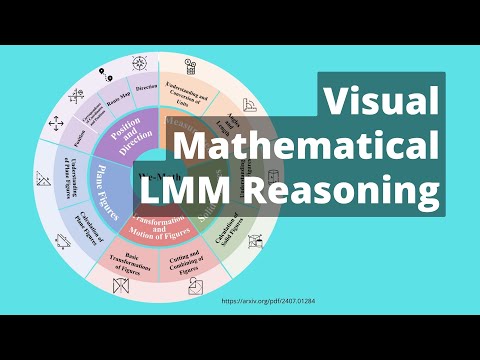

Watch a 21-minute research presentation exploring WE-MATH, a groundbreaking benchmark system for evaluating Large Multimodal Models (LMMs) in visual mathematical reasoning. Learn about the innovative four-dimensional metric system that assesses models' performance across Insufficient Knowledge, Inadequate Generalization, Complete Mastery, and Rote Memorization. Discover how this benchmark, featuring 6.5K visual math problems across 67 hierarchical knowledge concepts, reveals critical insights into LMMs' problem-solving capabilities and limitations. Explore the implementation of Knowledge Concept Augmentation (KCA) strategy and its impact on improving model performance, while understanding the challenges that remain in achieving human-like mathematical reasoning. Gain valuable insights into the correlation between problem complexity and model performance, particularly in scenarios requiring multiple knowledge concepts and integrated problem-solving approaches.

Visual Mathematical AI Reasoning - WE-MATH: Evaluating Human-like Mathematical Reasoning in Large Multimodal Models

Add to list

#Computer Science

#Artificial Intelligence

#Machine Learning

#Programming

#Software Development

#Software Testing

#Performance Testing

#Benchmarking