Description:

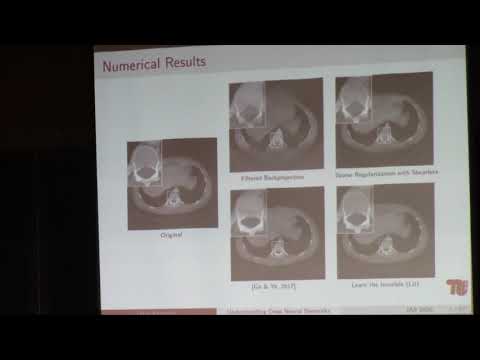

Explore a theoretical machine learning seminar on understanding deep neural networks, with a focus on generalization and interpretability. Gitta Kutyniok of Technische Universität Berlin discusses the dawn of deep learning, its impact on mathematical problems, and numerical results. The talk examines graph convolutional neural networks, including two approaches to convolution on graphs and spectral graph convolution. It covers spectral filtering using functional calculus, compares the effect of a filter on different graphs, analyzes the transferability of functional calculus filters, and rethinks the concept of transferability. It then addresses fundamental questions concerning deep neural networks: the general problem setting, relevance mapping, and a rate-distortion viewpoint. The seminar concludes with observations from an MNIST experiment.

Understanding Deep Neural Networks - From Generalization to Interpretability

#Computer Science

#Artificial Intelligence

#Neural Networks

#Deep Learning

#Deep Neural Networks

#Machine Learning

#Theoretical Machine Learning