Description:

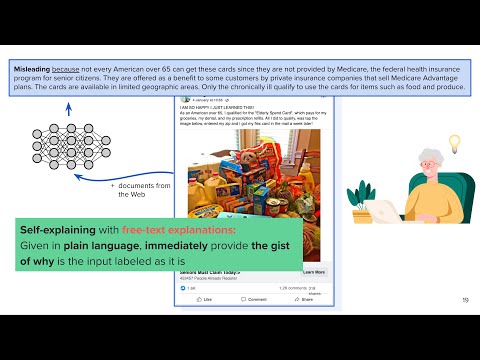

Learn about advanced concepts in uncertainty quantification and prompting for large language models in this lecture. It covers temperature scaling and Bayesian approaches to calibrating model confidence, then moves on to free-text explanations and chain-of-thought prompting. It also explains the principles of in-context learning (ICL), how to make ICL reliable, and prompt-based fine-tuning strategies. Case studies of FLAN-T5 and LLaMA Chat models show these techniques in practice and how they affect model performance and reliability.
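Temperature scaling, one of the calibration methods mentioned above, divides a model's logits by a single scalar T > 0 fitted on held-out data. A T above 1 softens overconfident predictions without changing which class is ranked first. The sketch below is a minimal illustration, not the lecture's own code: it assumes logits are plain Python lists, uses a coarse grid search over T in place of the usual gradient-based fit, and the function names are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax of logits divided by a temperature T > 0."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def nll(all_logits, labels, temperature):
    """Mean negative log-likelihood of the true labels at temperature T."""
    loss = 0.0
    for logits, y in zip(all_logits, labels):
        loss -= math.log(softmax(logits, temperature)[y])
    return loss / len(labels)

def fit_temperature(all_logits, labels, grid=None):
    """Pick the T that minimizes validation NLL.

    Assumption: a simple grid search stands in for the gradient-based
    optimization typically used to fit T.
    """
    if grid is None:
        grid = [0.05 * k for k in range(1, 201)]  # candidate T values in (0, 10]
    return min(grid, key=lambda t: nll(all_logits, labels, t))

# Hypothetical validation set: confident logits, one of four predictions wrong.
val_logits = [[4.0, 0.0, 0.0], [0.0, 4.0, 0.0], [4.0, 0.0, 0.0], [0.0, 0.0, 4.0]]
val_labels = [0, 1, 2, 2]
T = fit_temperature(val_logits, val_labels)
print("fitted T:", T)
print("calibrated probs:", softmax([4.0, 0.0, 0.0], T))
```

Because the model is right on only three of the four examples while assigning roughly 96% confidence at T = 1, the fitted T should come out above 1, lowering the top-class probability while leaving the predicted class unchanged.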

Uncertainty, Prompting, and Chain-of-Thoughts in Large Language Models - Part 2


#Computer Science

#Artificial Intelligence

#Natural Language Processing (NLP)

#Prompt Engineering

#Machine Learning

#LLM (Large Language Model)

#LLaMA (Large Language Model Meta AI)

#Mathematics

#Statistics & Probability

#Uncertainty Quantification

#Language Models

#In-context Learning

#Flan-T5