Description:

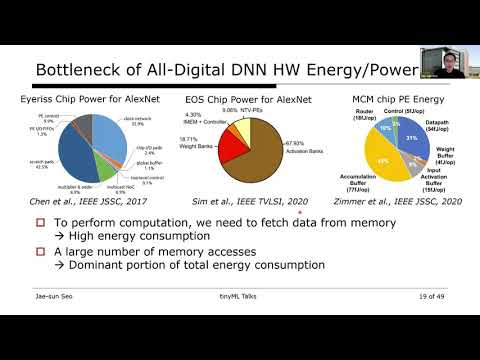

Explore SRAM-based in-memory computing (IMC) for energy-efficient AI inference in this tinyML talk. It reviews recent silicon demonstrations along with the memory bitcell circuits, peripheral circuits, and architectures designed to improve upon conventional row-by-row memory operations. Learn about a modeling framework for design parameter optimization and how these advances address the memory access and footprint limitations of low-power AI processors. Topics include analog computation inside memory arrays, analog-to-digital converter (ADC) optimization, programmable IMC accelerators, and noise-aware training and inference techniques. The talk also covers black-box adversarial input attacks and pruning of crossbar-based IMC hardware.

TinyML Talks - SRAM Based In-Memory Computing for Energy-Efficient AI Inference

#Computer Science

#Computer Architecture

#Hardware Acceleration

#Deep Learning

#Art & Design

#Visual Arts

#Architecture