Description:

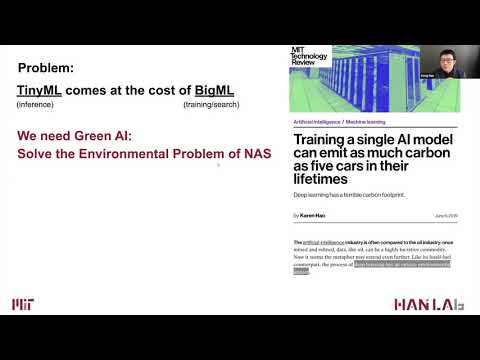

Explore an approach to deploying machine learning models efficiently across diverse hardware platforms in this tinyML Talks webcast. Learn about the Once-for-All (OFA) network, which decouples model training from architecture search: one network is trained once, and specialized subnetworks for different architectural settings are then selected from it without retraining. Discover the progressive shrinking algorithm, a generalized pruning method that reduces model size across more dimensions than conventional pruning: depth, width, kernel size, and input resolution. Understand how OFA outperforms state-of-the-art neural architecture search (NAS) methods on edge devices, reaching higher ImageNet top-1 accuracy than MobileNetV3 and EfficientNet at similar latency, or similar accuracy at lower latency. Gain insights into OFA's success in the 4th Low Power Computer Vision Challenge, and into how it enables efficient inference on diverse hardware platforms while cutting the GPU hours and CO2 emissions that a separate search and training run for every deployment scenario would require.
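To make the "train once, specialize later" idea concrete, here is a minimal Python sketch. It assumes a small MobileNet-style elastic design space (the kernel sizes, depths, expand ratios, resolutions, and `NUM_UNITS` below are illustrative choices, not values taken from the talk). It models progressive shrinking as a schedule that unlocks smaller options one dimension at a time (the unlock order is also an assumption), then "specializes" by searching the elastic space under a latency budget. The helpers `sample_subnet`, `toy_latency`, and `search` are hypothetical. No training happens here, the latency proxy is a made-up formula rather than measured latency, and plain random search stands in for a real accuracy-aware search.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Illustrative elastic design space (assumed values, largest option first).
KERNEL_SIZES = [7, 5, 3]
DEPTHS = [4, 3, 2]                          # layers per unit
EXPAND_RATIOS = [6, 4, 3]                   # width multipliers
RESOLUTIONS = list(range(224, 127, -32))    # 224, 192, 160, 128
NUM_UNITS = 5


@dataclass
class SubnetConfig:
    resolution: int
    depths: List[int]            # active layers in each unit
    kernels: List[List[int]]     # kernel size of each active layer
    expands: List[List[int]]     # expand ratio of each active layer


def allowed_choices(phase: int) -> Tuple[List[int], List[int], List[int]]:
    """Progressive shrinking schedule (order assumed for this sketch):
    phase 0 = full network only, 1 = + elastic kernel size,
    2 = + elastic depth, 3 = + elastic width."""
    kernels = KERNEL_SIZES if phase >= 1 else KERNEL_SIZES[:1]
    depths = DEPTHS if phase >= 2 else DEPTHS[:1]
    expands = EXPAND_RATIOS if phase >= 3 else EXPAND_RATIOS[:1]
    return kernels, depths, expands


def sample_subnet(phase: int, rng: random.Random) -> SubnetConfig:
    """Sample one subnetwork allowed in the given phase; resolution is elastic in every phase."""
    kernels, depths, expands = allowed_choices(phase)
    unit_depths = [rng.choice(depths) for _ in range(NUM_UNITS)]
    return SubnetConfig(
        resolution=rng.choice(RESOLUTIONS),
        depths=unit_depths,
        kernels=[[rng.choice(kernels) for _ in range(d)] for d in unit_depths],
        expands=[[rng.choice(expands) for _ in range(d)] for d in unit_depths],
    )


def toy_latency(cfg: SubnetConfig) -> float:
    """Hypothetical cost proxy: sum of kernel^2 * expand over active layers,
    scaled by input area. A real deployment would use measured latency."""
    ops = sum(k * k * e
              for ks, es in zip(cfg.kernels, cfg.expands)
              for k, e in zip(ks, es))
    return ops * (cfg.resolution / 224) ** 2


def search(budget: float, trials: int, rng: random.Random) -> Optional[SubnetConfig]:
    """Specialize without retraining: random search over the fully elastic space,
    keeping the costliest subnet that fits the budget (a crude accuracy stand-in)."""
    best, best_lat = None, -1.0
    for _ in range(trials):
        cfg = sample_subnet(phase=3, rng=rng)
        lat = toy_latency(cfg)
        if best_lat < lat <= budget:
            best, best_lat = cfg, lat
    return best


if __name__ == "__main__":
    rng = random.Random(0)
    for phase in range(4):
        print(phase, sample_subnet(phase, rng).depths)
    chosen = search(budget=1000.0, trials=500, rng=rng)
    if chosen is not None:
        print(chosen.resolution, chosen.depths, round(toy_latency(chosen), 1))
```

Because every subnetwork in this sketch is just a configuration over one shared space, targeting a new device only means changing `budget` (and, in practice, the latency model); the expensive training step would not be repeated.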

Train One Network and Specialize It for Efficient Deployment


#Computer Science

#Machine Learning

#AutoML

#Programming

#Cloud Computing

#Edge Computing

#Model Optimization