Description:

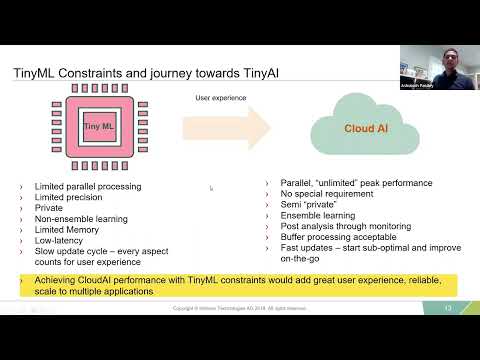

Explore techniques for building efficient and robust TinyML deployments in this 57-minute tinyML Talk. Delve into the challenges of deploying deep learning applications at the edge, including privacy concerns, low power requirements, and robustness against out-of-distribution data. Learn about trade-offs between power and performance in supervised learning scenarios, and discover a dynamic fixed-point quantization scheme suitable for edge deployment when only limited calibration data is available. Examine the compute resource trade-offs in quantization, such as memory footprint and cycle count. Gain insights into an edge deployment architecture that uses deep learning methods to handle out-of-distribution data caused by sensor degradation and unfamiliar operating conditions. Topics covered include TinyML vs. Cloud AI, data considerations, transfer learning, keyword spotting, quantization techniques, architecture tweaking, and out-of-distribution detection.

Exploring Techniques to Build Efficient and Robust TinyML Deployments
