Description:

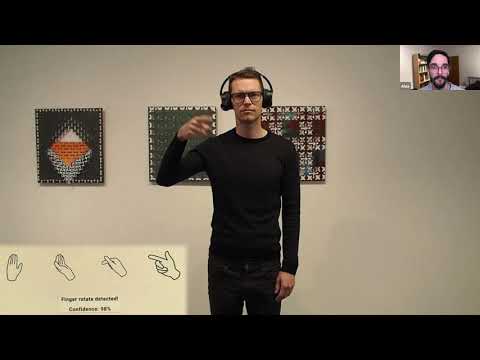

Learn how to build advanced hand-gesture controls using radar sensors and tinyML in this 33-minute tinyML Talks webcast. In a case study from Imagimob, a pioneer in Edge AI, the presenters walk through the creation of the gesture-controlled headphones showcased at CES 2020. The talk covers how to develop Edge AI applications with Imagimob AI (SaaS), including data collection, preprocessing, and AI model creation. It also covers the challenges the team faced, future possibilities, and how this approach differs from others. The presentation shows working proofs of concept and the CES demos, and answers audience questions. Together, these give an overview of how radar sensing and tinyML combine for gesture control.

How to Build Advanced Hand-Gestures Using Radar and TinyML
