Description:

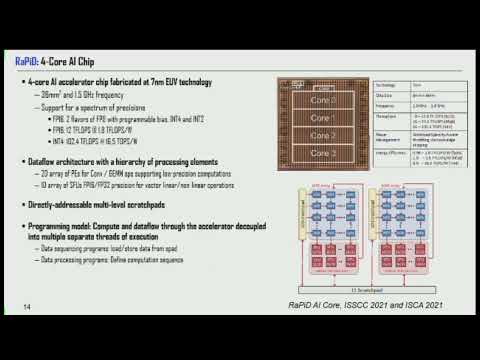

Explore a conference talk from the tinyML Summit 2022 on the three pillars of AI acceleration: algorithms, hardware, and software. Learn about IBM Research's holistic approach to designing specialized AI systems, guided by the evolution of AI workloads. Discover approximate computing techniques for low-precision deep neural network (DNN) models, hardware methods for building scalable computational arrays that support multiple precisions, and software methodologies for mapping DNNs efficiently onto that hardware. Understand why hardware specialization and acceleration are needed to meet the computational demands of DNNs. Examine topics such as FP8 training, dataflow architectures, rapid AI chip development, and compiler design for AI systems. See how cross-layer design across the compute stack contributes to the success of DNNs on complex AI tasks in multiple domains.

Mastering the Three Pillars of AI Acceleration: Algorithms, Hardware, and Software

#Computer Science

#Artificial Intelligence

#Programming

#Programming Languages

#Compiler Design

#Deep Learning

#Deep Neural Networks