Description:

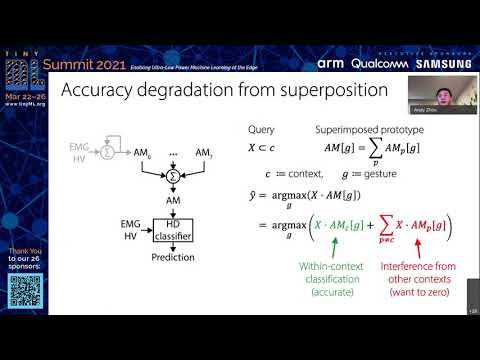

Explore research on memory-efficient, limb position-aware hand gesture recognition using hyperdimensional computing in this 20-minute talk from the tinyML Research Symposium 2021. Andy Zhou, a PhD student at the University of California, Berkeley, addresses a reliability problem in electromyogram (EMG) pattern recognition: accuracy degrades when the limb position changes. Learn about dual-stage classification, in which the limb position is identified first and a position-specific gesture classifier is then applied, and why storing a separate classifier per position is hard to fit on wearable devices with limited resources. Discover how sensor fusion of accelerometer and EMG signals in a hyperdimensional computing model can emulate dual-stage classification efficiently. Examine two methods of encoding accelerometer features so that position-specific parameters can be retrieved from multiple models stored in superposition. See how the approach is validated on a dataset of 13 gestures in 8 limb positions, reaching a classification accuracy of up to 94.34%, while keeping a much smaller memory footprint than traditional dual-stage classification architectures.
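To make the idea of "multiple models stored in superposition" more concrete, here is a minimal NumPy sketch. It is not the method from the talk: the dimensionality `D`, the random bipolar prototypes, the per-position key vectors, and the helper names (`random_bipolar`, `classify`, `noisy_copy`) are all assumptions for illustration, and the limb position is assumed to be already known as an index rather than encoded from accelerometer features. It only shows the general hyperdimensional computing pattern of binding each position-specific model to a key, summing the results into one model, and unbinding with the key at query time.

```python
import numpy as np

D = 10_000          # hypervector dimensionality (assumed, not from the talk)
N_POSITIONS = 8     # limb positions, matching the dataset described above
N_GESTURES = 13     # gesture classes, matching the dataset described above

rng = np.random.default_rng(0)

def random_bipolar(*shape):
    """Draw random hypervectors with entries in {-1, +1}."""
    return rng.choice(np.array([-1, 1], dtype=np.int8), size=shape)

# Hypothetical stand-ins: one key per limb position, and one gesture
# prototype per (position, gesture) pair. A real system would learn the
# prototypes from EMG features instead of drawing them at random.
position_keys = random_bipolar(N_POSITIONS, D)
prototypes = random_bipolar(N_POSITIONS, N_GESTURES, D)

# Bind each position's prototypes to its key (elementwise multiply), then
# superpose by summing over positions and thresholding. The result stores
# N_GESTURES vectors instead of N_POSITIONS * N_GESTURES.
bound = position_keys[:, None, :].astype(np.int32) * prototypes
superposed = np.sign(bound.sum(axis=0)).astype(np.int8)  # ties become 0

def classify(emg_hv, position):
    """Unbind the superposed model with the position key, then pick the
    gesture whose recovered prototype is most similar to the query."""
    unbound = superposed * position_keys[position]
    scores = unbound.astype(np.int32) @ emg_hv.astype(np.int32)
    return int(np.argmax(scores))

def noisy_copy(hv, flip_fraction=0.2):
    """Simulate a noisy EMG query by flipping a fraction of the entries."""
    flips = rng.random(hv.shape) < flip_fraction
    return np.where(flips, -hv, hv)

# Example: a noisy query for gesture 7 recorded in limb position 3.
query = noisy_copy(prototypes[3, 7])
print(classify(query, position=3))
```

Because random high-dimensional vectors are nearly orthogonal, the prototypes bound to other positions act as low-level noise after unbinding, which is why a single superposed model can stand in for several position-specific ones in this toy setting.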

Memory-Efficient, Limb Position-Aware Hand Gesture Recognition Using Hyperdimensional Computing

#Computer Science

#Machine Learning

#Engineering

#Electrical Engineering

#Embedded Systems

#Pattern Recognition

#Robotics

#Sensor Fusion