Description:

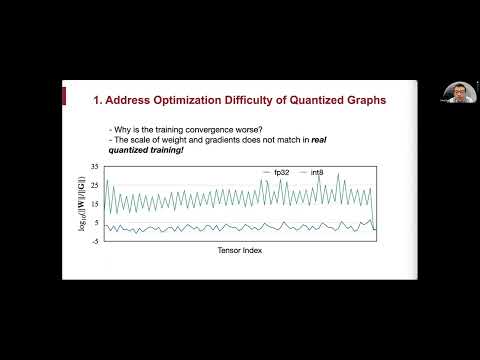

Explore on-device learning techniques for IoT devices with limited memory in this tinyML Forum talk by MIT EECS Assistant Professor Song Han. Discover an algorithm-system co-design framework enabling neural network fine-tuning with only 256KB of memory. Learn about quantization-aware scaling to stabilize quantized training and sparse update techniques to reduce memory footprint. Understand the implementation of Tiny Training Engine, a lightweight system that prunes backward computation graphs and offloads runtime auto-differentiation. Gain insights into practical solutions for on-device transfer learning in visual recognition tasks on microcontrollers, using significantly less memory than existing frameworks while matching cloud training accuracy. Delve into topics such as edge learning challenges, memory bottlenecks, parameter-efficient transfer learning, and deep gradient compression. See how this approach enables continuous adaptation and lifelong learning for tiny IoT devices, demonstrating their capability to perform both inference and on-device learning.

Read more

On-Device Learning Under 256KB Memory - Challenges and Solutions for IoT Devices

Add to list

#Computer Science

#Machine Learning

#TinyML

#Artificial Intelligence

#Neural Networks

#Engineering

#Electrical Engineering

#Microcontrollers

#Transfer Learning

#Quantization

#Programming

#Cloud Computing

#Edge Computing