Description:

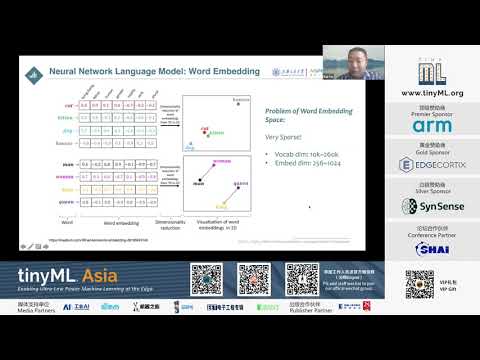

Explore a 32-minute conference talk from tinyML Asia 2020 on structured quantization techniques for compressing neural network language models. Delve into the challenge of large memory consumption in resource-constrained scenarios and discover how advanced structured quantization methods can achieve compression ratios of 70x to 100x without compromising performance. Learn about various compression approaches, including pruning, fixed-point quantization, product quantization, and binarization. Examine their impact on speech recognition performance and compare the results with full-precision models. Gain insights into applying these techniques to word embeddings and neural network architectures in natural language processing and speech recognition.

Structured Quantization for Neural Network Language Model Compression

#Computer Science

#Machine Learning

#Language Models

#Artificial Intelligence

#Speech Recognition

#Word Embeddings