Description:

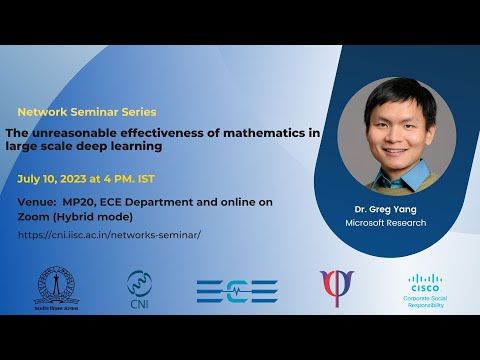

Explore the mathematical theory behind infinite-width neural networks in this research lecture by Greg Yang of Microsoft Research, presented at the Centre for Networked Intelligence, IISc. Learn about muTransfer, a technique for efficiently tuning the hyperparameters of very large neural networks: it was used to tune the 6.7-billion-parameter GPT-3 model with only 7% of that model's pretraining compute. Learn about the Optimal Scaling Thesis, which connects infinite-size limits to the practical design of large models. The lecture covers key mathematical concepts including geometrical intuition, infinite slopes, pure mathematical arguments, and dynamical economy theorems. Yang, a Harvard graduate and winner of mathematics awards including the Hoopes Prize, presents research questions whose answers could substantially advance the development of artificial intelligence.

The Unreasonable Effectiveness of Mathematics in Large Scale Deep Learning - From Theory to Practice

#Mathematics

#Computer Science

#Deep Learning

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#LLM (Large Language Model)

#GPT-3

#Machine Learning

#Transfer Learning

#Model Tuning