Description:

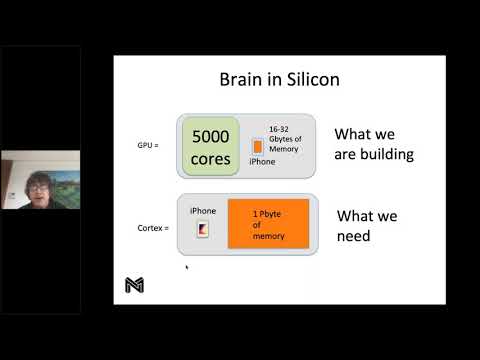

Discover how to process complex deep learning models at scale without specialized AI hardware in this 32-minute conference talk. Explore the Neural Magic Inference Engine, which challenges conventional wisdom about high-throughput computing by utilizing commodity CPUs instead of GPUs. Learn how CPUs' sophisticated cache memory hierarchies can address the limitations of GPUs, allowing for exceptional model performance without sacrificing accuracy. Gain insights into running deep neural networks with GPU-class performance on commodity CPUs, offering deployment flexibility and cost-effectiveness for data science teams. Witness a live demonstration of the Neural Magic Demo and understand how this software-based approach may revolutionize machine learning inference in real-world applications.

The Software GPU - Making Inference Scale in the Real World

Add to list

#Computer Science

#Deep Learning

#Programming

#Software Development

#Artificial Intelligence

#Neural Networks

#Computer Vision

#Image Recognition