Description:

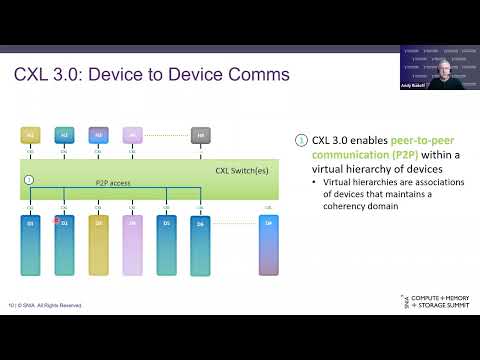

Watch a 22-minute conference presentation from the SNIA Compute+Memory+Storage Summit on the expanded capabilities and optimizations in CXL 3.0. Explore the latest features of the Compute Express Link (CXL) 3.0 specification, including a doubled transfer rate of 64 GT/s, enhanced fabric capabilities, improved memory sharing and pooling, and advanced coherency features. Learn how CXL supports high-performance computational workloads in AI, machine learning, analytics, and cloud infrastructure through heterogeneous processing and memory systems. See how memory pooling is used to optimize server performance and how memory sharing lets clusters of machines tackle large-scale problems through shared memory constructs. Intel's Andy Rudoff walks through detailed examples of memory pool configuration, HDM decoder programming, memory addition, and shared memory implementation, showing how these features improve data center efficiency and resource utilization.

CXL 3.0 - Expanded Capabilities for Memory Pooling and Resource Optimization

#CXL

#Computer Science

#Computer Architecture

#Memory Management

#High Performance Computing