Description:

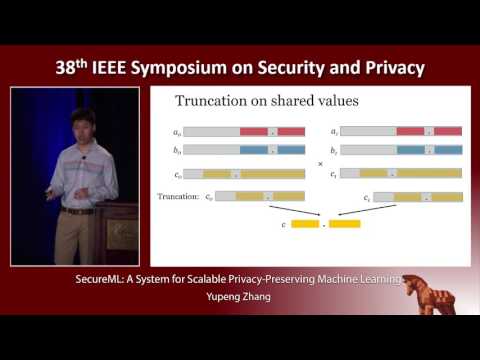

Explore SecureML, a system for scalable privacy-preserving machine learning, in this IEEE conference talk. Delve into new and efficient protocols for privacy-preserving linear regression, logistic regression, and neural network training using stochastic gradient descent. The protocols work in a two-server model: data owners distribute their private data between two non-colluding servers, which train models on the joint data using secure two-party computation (2PC). Discover techniques for secure arithmetic on shared decimal numbers and for replacing nonlinear functions such as sigmoid and softmax with alternatives that are cheap to evaluate under secure multiparty computation (MPC). Learn how this system, implemented in C++, runs several orders of magnitude faster than state-of-the-art implementations of privacy-preserving linear and logistic regression while scaling to millions of data samples with thousands of features. Gain insights into what the authors present as the first privacy-preserving system for training neural networks, which aims to produce useful models without revealing the underlying training data.
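The two techniques named above, arithmetic on shared decimal numbers and an MPC-friendly replacement for sigmoid, can be illustrated with a small Python sketch. It is not the talk's C++ code, and its parameters are assumptions: values are additive shares between two parties in the ring Z_{2^64}, decimals use a fixed-point encoding with 13 fractional bits, and a simulated trusted dealer supplies the Beaver multiplication triple. After a multiplication, each party truncates its own share locally with no interaction, and the sigmoid is replaced by the piecewise-linear function that is 0 below -1/2, x + 1/2 in between, and 1 above 1/2. Both choices mirror the approach commonly attributed to SecureML, but treat this as a sketch; here the piecewise function runs in the clear, whereas a real protocol would evaluate its comparisons securely.

```python
import random

# Assumed, illustrative parameters (not taken from the talk):
L = 64           # shares are elements of the ring Z_{2^L}
F = 13           # fractional bits in the fixed-point encoding
MOD = 1 << L


def encode(x):
    """Encode a real number as a fixed-point ring element (two's complement)."""
    return round(x * (1 << F)) % MOD


def decode(v):
    """Decode a ring element, treating the upper half of the ring as negative."""
    v %= MOD
    if v >= MOD // 2:
        v -= MOD
    return v / (1 << F)


def share(v):
    """Split v into two additive shares: s0 + s1 = v (mod 2^L)."""
    r = random.randrange(MOD)
    return r, (v - r) % MOD


def reconstruct(s0, s1):
    """Recombine two additive shares."""
    return (s0 + s1) % MOD


def beaver_triple():
    """Shares of a random triple (a, b, c = a*b).

    A simulated trusted dealer stands in for the offline phase here.
    """
    a, b = random.randrange(MOD), random.randrange(MOD)
    return share(a), share(b), share(a * b % MOD)


def mul_shared(x_sh, y_sh):
    """Multiply two shared values; the product carries 2F fractional bits."""
    (a0, a1), (b0, b1), (c0, c1) = beaver_triple()
    # Both parties open the masked differences e = x - a and f = y - b.
    e = reconstruct(x_sh[0] - a0, x_sh[1] - a1)
    f = reconstruct(y_sh[0] - b0, y_sh[1] - b1)
    # x*y = c + e*b + f*a + e*f; only party 0 adds the public term e*f.
    z0 = (c0 + e * b0 + f * a0 + e * f) % MOD
    z1 = (c1 + e * b1 + f * a1) % MOD
    return z0, z1


def truncate_shares(z0, z1):
    """Drop F fractional bits by having each party shift its own share locally.

    For |z| much smaller than 2^L, the result is off by at most one unit in
    the last place, except with probability negligible in the ring size.
    """
    t0 = z0 >> F
    t1 = (MOD - ((MOD - z1) >> F)) % MOD
    return t0, t1


def mpc_friendly_sigmoid(x):
    """Piecewise-linear stand-in for 1 / (1 + e^-x), evaluated in the clear here."""
    if x < -0.5:
        return 0.0
    if x > 0.5:
        return 1.0
    return x + 0.5


if __name__ == "__main__":
    x_sh, y_sh = share(encode(1.5)), share(encode(-2.25))
    z_sh = truncate_shares(*mul_shared(x_sh, y_sh))
    print(decode(reconstruct(*z_sh)))  # close to -3.375
    print(mpc_friendly_sigmoid(0.25))  # 0.75
```

Local truncation avoids an extra interactive round after every fixed-point multiplication, at the cost of a possible one-unit error and a negligible failure probability. The piecewise function needs only comparisons and an addition, which are far cheaper to evaluate securely than an exponential.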

SecureML - A System for Scalable Privacy-Preserving Machine Learning

#Computer Science

#Machine Learning

#Privacy-Preserving Machine Learning

#Artificial Intelligence

#Neural Networks

#Mathematics

#Statistics & Probability

#Linear Regression

#Logistic Regression

#Stochastic Gradient Descent