Description:

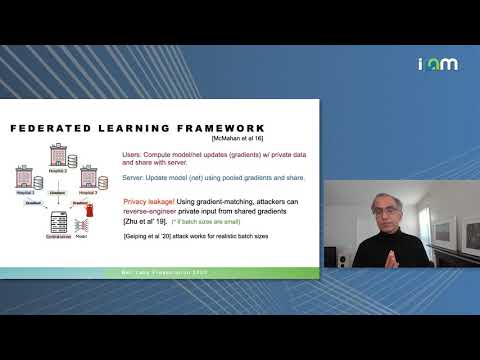

Explore the challenges of, and proposed solutions for, enabling deep learning on private data without compromising privacy in this 23-minute conference talk by Sanjeev Arora of Princeton University. Delve into Federated Learning and the need for secure data sharing among multiple parties. Learn about InstaHide and TextHide, methods for "encrypting" images and text so that models can be trained on the encoded data. Examine the limitations of current Federated Learning frameworks and the vulnerabilities exposed by recent attacks. Discover how these encoding techniques, inspired by the MixUp data augmentation technique (which trains on random convex combinations of examples), aim to provide stronger privacy protection. Analyze the Carlini et al. 2020 attack on InstaHide, which combines combinatorial algorithms with deep learning, and evaluate its implications for data privacy. Gain insight into the ongoing challenges and advances in protecting sensitive data while enabling collaborative deep learning across multiple parties.
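To make the MixUp connection concrete, here is a minimal Python sketch of an InstaHide-style encoding, assuming images are flattened lists of pixel values scaled to [-1, 1]. MixUp trains on a convex combination λx₁ + (1 − λ)x₂; an InstaHide-style encoding mixes a private image with k − 1 other images using random nonnegative coefficients that sum to 1, then multiplies each pixel by a random ±1 sign. The function names `mixup` and `instahide_encode` are hypothetical, the Dirichlet(1, …, 1) coefficient sampling is an assumption, and the label mixing and exact sampling rules of the published method are not reproduced here.

```python
import random
from typing import List, Sequence, Tuple


def mixup(x1: Sequence[float], x2: Sequence[float], lam: float) -> List[float]:
    """Classic MixUp: the convex combination lam * x1 + (1 - lam) * x2."""
    return [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]


def instahide_encode(
    private: Sequence[float],
    pool: List[Sequence[float]],
    k: int,
    rng: random.Random,
) -> Tuple[List[float], List[float]]:
    """Hypothetical InstaHide-style encoder (simplified sketch, not the paper's code).

    Mixes `private` with k - 1 images drawn from `pool`, then applies a
    random per-pixel sign mask. Returns the encoding and the mixing weights.
    """
    images = [private] + rng.sample(pool, k - 1)
    # Assumption: normalizing i.i.d. exponential draws gives a uniform
    # Dirichlet(1, ..., 1) sample, i.e. nonnegative weights summing to 1.
    raw = [rng.expovariate(1.0) for _ in range(k)]
    total = sum(raw)
    coeffs = [r / total for r in raw]
    # Pixel-wise weighted sum of the k images (MixUp generalized to k inputs).
    mixed = [
        sum(c * img[i] for c, img in zip(coeffs, images))
        for i in range(len(private))
    ]
    # Random +1/-1 mask applied independently to every pixel.
    mask = [rng.choice((-1, 1)) for _ in range(len(private))]
    encoded = [s * v for s, v in zip(mask, mixed)]
    return encoded, coeffs


if __name__ == "__main__":
    rng = random.Random(0)
    private_img = [0.5, -0.2, 0.9]
    public_pool = [[0.1, 0.1, 0.1], [0.3, -0.3, 0.0], [-0.5, 0.5, 0.2]]
    enc, weights = instahide_encode(private_img, public_pool, k=4, rng=rng)
    print("weights:", weights)
    print("encoding:", enc)
```

Because the sign mask only flips signs, the absolute values of an encoding equal those of the underlying mix; this is one reason the random mask alone offers limited protection.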


How to Allow Deep Learning on Your Data Without Revealing Your Data


#Computer Science

#Machine Learning

#Federated Learning

#Deep Learning

#Cryptography

#Algorithms

#Combinatorial Algorithms

#Information Security (InfoSec)

#Cybersecurity

#Privacy

#Differential Privacy