Description:

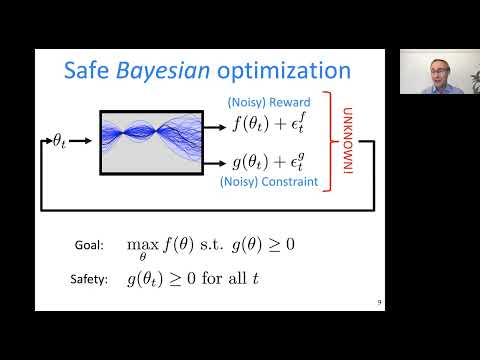

Explore safe and efficient reinforcement learning techniques in this one-hour seminar from the Machine Learning Advances and Applications series. Delve into the challenges of applying RL beyond simulated environments, focusing on safety constraints and on tuning real-world systems such as the Swiss Free Electron Laser. Learn about safe Bayesian optimization, Gaussian process inference, and the use of confidence intervals to certify safety. Discover methods for safe learning in dynamical systems, including planning with confidence bounds and forward-propagating uncertain, nonlinear dynamics. Examine how to scale up efficient optimistic exploration in deep model-based RL, with illustrations on the inverted pendulum and MuJoCo Half-Cheetah environments. Investigate PAC-Bayesian meta-learning for choosing priors and its applications in Bayesian optimization and sequential decision making. Gain insights into safe and efficient exploration techniques applicable to real-world reinforcement learning scenarios.

Safe and Efficient Exploration in Reinforcement Learning
