Description:

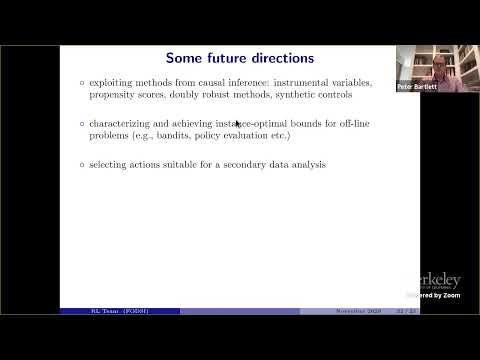

Explore reinforcement learning (RL) in this 31-minute lecture by Martin Wainwright of UC Berkeley, presented at the Foundations of Data Science Institute Kickoff Workshop. Gain a bird's-eye view of RL and its application in personal health. Delve into exploiting structure in RL, including Q-learning with low-rank structure and a comparison of model-free and model-based methods. Examine the performance of LSTD versus model-based methods in linear state-space models with quadratic reward functions. Investigate the exploration/exploitation trade-off beyond bandits, including Q-learning with UCB and Monte Carlo Tree Search. Consider the concept of instance-optimality in RL, particularly in policy evaluation. Finally, explore RL in offline settings and its connections to causal inference, touching on instrumental variables, propensity scores, doubly robust methods, and synthetic controls.

Reinforcement Learning

#Computer Science

#Machine Learning

#Reinforcement Learning

#Data Science

#Mathematics

#Statistics & Probability

#Causal Inference

#Q-learning

#Offline Reinforcement Learning