Description:

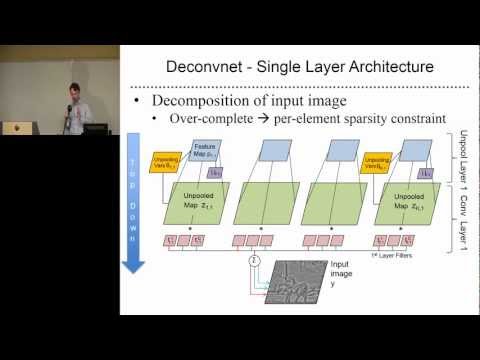

Explore a guest lecture on regularization techniques for large neural networks, delivered by Dr. Rob Fergus at the University of Central Florida. The lecture covers big neural nets and overfitting, the Dropout and DropConnect methods, stochastic pooling, and deconvolutional networks. Learn about the theoretical analysis of these techniques and their limitations, and see them applied in experiments on datasets such as MNIST, Street View House Numbers (SVHN), and Caltech 101. Gain insights into convergence rates, the effects of network size and training set variations, and the link between deconvolutional networks and parts-and-structure models. Enhance your understanding of advanced deep learning concepts and their impact on computer vision tasks.

Regularization of Big Neural Networks

#Computer Science

#Artificial Intelligence

#Neural Networks

#Deep Learning

#Computer Vision

#Machine Learning

#Overfitting