Description:

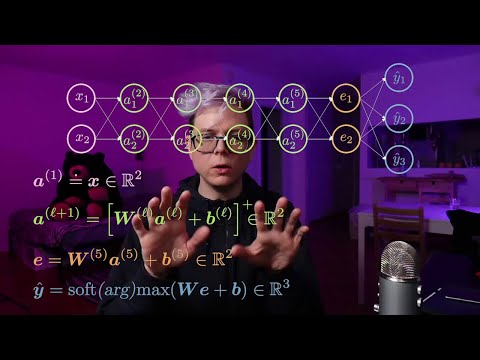

Explore recurrent neural networks (RNNs), including vanilla and gated Long Short-Term Memory (LSTM) architectures, in this comprehensive lecture. Dive into sequence processing setups such as vector-to-sequence, sequence-to-vector, and sequence-to-sequence models. Learn about backpropagation through time, language modeling, and the vanishing and exploding gradient problems that arise when training RNNs. Discover the LSTM architecture and its gating mechanism. Gain hands-on experience with a practical demonstration of sequence classification using Jupyter Notebook and PyTorch. Learn how to summarize research papers effectively, and understand why higher hidden dimensions matter in neural networks.
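To make the difference between a vanilla RNN and a gated LSTM concrete, here is a minimal plain-Python sketch of both, used as sequence-to-vector encoders. The sketch is not the lecture's PyTorch notebook. It assumes a small random weight initialization and zero initial states. All helper names (`matvec`, `vanilla_rnn`, `lstm`, and the gate keys `"input"`, `"forget"`, `"output"`, `"cell"`) are hypothetical and chosen for illustration. In PyTorch, the corresponding layers are `torch.nn.RNN` and `torch.nn.LSTM`.

```python
import math
import random

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def add(*vecs):
    """Element-wise sum of equal-length vectors."""
    return [sum(vals) for vals in zip(*vecs)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rand_matrix(rows, cols, rng):
    """Hypothetical small uniform initialization, for illustration only."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def vanilla_rnn(xs, W_xh, W_hh, b_h):
    """Sequence-to-vector: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h); returns the final h."""
    h = [0.0] * len(b_h)
    for x in xs:
        h = [math.tanh(z) for z in add(matvec(W_xh, x), matvec(W_hh, h), b_h)]
    return h

def lstm(xs, params, hidden):
    """Sequence-to-vector LSTM; params maps a gate name to (W_x, W_h, b)."""
    h = [0.0] * hidden
    c = [0.0] * hidden  # cell state (the LSTM's memory)
    for x in xs:
        pre = {gate: add(matvec(Wx, x), matvec(Wh, h), b)
               for gate, (Wx, Wh, b) in params.items()}
        i = [sigmoid(z) for z in pre["input"]]    # how much new content to write
        f = [sigmoid(z) for z in pre["forget"]]   # how much old memory to keep
        o = [sigmoid(z) for z in pre["output"]]   # how much memory to expose as h
        g = [math.tanh(z) for z in pre["cell"]]   # candidate new content
        # Additive cell update: the forget gate scales old memory, the input gate admits new content
        c = [ft * ct + it * gt for ft, ct, it, gt in zip(f, c, i, g)]
        h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h

# Usage: encode a random length-5 sequence of 3-dim inputs into a 4-dim vector
rng = random.Random(0)
input_dim, hidden = 3, 4
xs = [[rng.uniform(-1, 1) for _ in range(input_dim)] for _ in range(5)]

h_rnn = vanilla_rnn(xs, rand_matrix(hidden, input_dim, rng),
                    rand_matrix(hidden, hidden, rng), [0.0] * hidden)
params = {gate: (rand_matrix(hidden, input_dim, rng),
                 rand_matrix(hidden, hidden, rng), [0.0] * hidden)
          for gate in ("input", "forget", "output", "cell")}
h_lstm = lstm(xs, params, hidden)
print(len(h_rnn), len(h_lstm))  # 4 4
```

For sequence classification, a linear layer followed by a softmax would typically be applied to the final hidden vector. The additive update of `c` is the design choice that lets gradients flow across many time steps more easily than the repeated `tanh` squashing in the vanilla RNN.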

Recurrent Neural Networks, Vanilla and Gated - LSTM


#Computer Science

#Artificial Intelligence

#Neural Networks

#Recurrent Neural Networks (RNN)

#Long Short-Term Memory (LSTM)

#Deep Learning

#PyTorch

#Data Science

#Jupyter Notebooks

#Backpropagation