Description:

Save Big on Coursera Plus. 7,000+ courses at $160 off. Limited Time Only!

Grab it

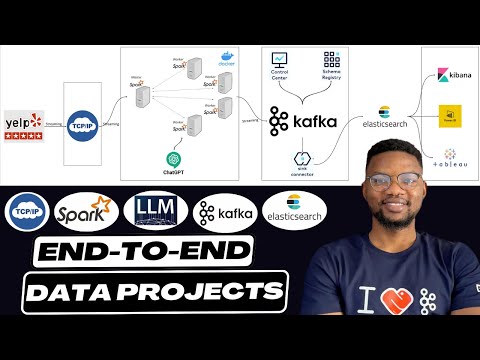

Build a real-time data streaming pipeline processing 7 million records using TCP/IP Socket, Apache Spark, OpenAI Large Language Model (LLM), Kafka, and Elasticsearch. Learn to configure TCP/IP for data transmission, stream data with Apache Spark, perform real-time sentiment analysis using OpenAI LLM (ChatGPT), set up Kafka for data ingestion and distribution, and utilize Elasticsearch for efficient indexing and search. Follow along to create a Spark Master-worker architecture with Docker, set up TCP IP Socket Source Stream, configure Apache Spark Stream, establish a Kafka Cluster on Confluent Cloud, integrate real-time sentiment analysis, deploy Elasticsearch on Elastic Cloud, and perform real-time data indexing. Gain hands-on experience in prompt engineering and test the complete pipeline to see the results of this comprehensive data engineering project.

Realtime Socket Streaming with Apache Spark - End-to-End Data Engineering Project

Add to list

#Data Science

#Big Data

#Apache Spark

#Computer Science

#DevOps

#Docker

#Artificial Intelligence

#Natural Language Processing (NLP)

#Sentiment Analysis

#Elasticsearch

#Computer Networking

#TCP/IP

#Data Engineering

#Data Streaming

#OpenAI