Description:

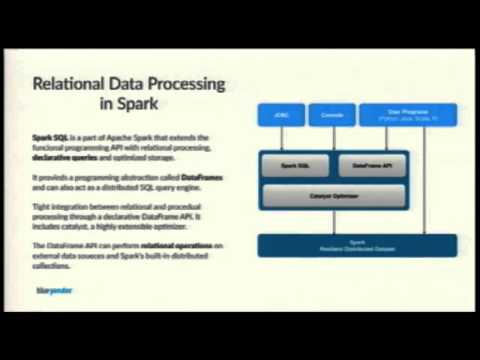

Explore PySpark, the Python API for Apache Spark, and how it supports large-scale data processing in this 24-minute EuroPython 2015 conference talk. Gain an overview of Resilient Distributed Datasets (RDDs) and the DataFrame API, and see how PySpark exposes Spark's programming model to Python. Learn how RDDs work as immutable, partitioned collections of objects, and how transformations (which are recorded lazily) and actions (which trigger execution) fit into Spark's directed acyclic graph (DAG) execution model. Discover the DataFrame API, introduced in Spark 1.3, which simplifies operations on large datasets and supports a variety of data sources. Delve into cluster computing, fault-tolerant abstractions, and in-memory computation across large clusters, and access additional resources on Spark architecture, analytics, and cluster computing for further study.
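The RDD model described above (immutable, partitioned data; lazy transformations; actions that trigger execution) can be illustrated without a Spark installation. The following is a minimal sketch only: `ToyRDD` is a hypothetical, pure-Python toy invented for illustration, not part of PySpark, and it models a single linear chain of narrow transformations with no shuffles, scheduler, or distribution. In real PySpark, the analogous calls would be `sc.parallelize(range(10), 3)`, `.filter(...)`, `.map(...)`, `.collect()`, `.count()`, and `.reduce(...)` on a `SparkContext` named `sc`.

```python
from functools import reduce
from typing import Callable, Iterable, Iterator, List


class ToyRDD:
    """Hypothetical toy model of an RDD (not PySpark's API): an immutable,
    partitioned collection whose transformations are recorded lazily."""

    def __init__(self, partitions: Iterable[Iterable], lineage: tuple = ()):
        # Freeze partitions as tuples so the source data cannot be mutated.
        self._partitions = tuple(tuple(p) for p in partitions)
        # Lineage: an ordered chain of per-partition steps (iterator -> iterator).
        self._lineage = lineage

    @classmethod
    def parallelize(cls, data: Iterable, num_partitions: int = 2) -> "ToyRDD":
        items = list(data)
        size = -(-len(items) // num_partitions)  # ceiling division
        # Contiguous slices, one per partition (the last may be shorter).
        return cls(items[i * size:(i + 1) * size] for i in range(num_partitions))

    # ---- Transformations: return a NEW ToyRDD; nothing runs yet. ----
    def _then(self, step: Callable[[Iterator], Iterator]) -> "ToyRDD":
        return ToyRDD(self._partitions, self._lineage + (step,))

    def map(self, f: Callable) -> "ToyRDD":
        return self._then(lambda it: map(f, it))

    def filter(self, pred: Callable) -> "ToyRDD":
        return self._then(lambda it: filter(pred, it))

    # ---- Actions: replay the lineage on every partition and return a value. ----
    def _compute(self) -> Iterator[List]:
        for part in self._partitions:
            it: Iterator = iter(part)
            for step in self._lineage:
                it = step(it)
            yield list(it)

    def collect(self) -> List:
        return [x for part in self._compute() for x in part]

    def count(self) -> int:
        return sum(len(part) for part in self._compute())

    def reduce(self, f: Callable):
        # Reduce inside each non-empty partition, then combine the partial results.
        partials = [reduce(f, part) for part in self._compute() if part]
        return reduce(f, partials)


calls = []


def square(x):
    calls.append(x)  # record each real invocation of the function
    return x * x


nums = ToyRDD.parallelize(range(10), num_partitions=3)
evens_squared = nums.filter(lambda x: x % 2 == 0).map(square)
print(len(calls))                                # 0: nothing has run yet
print(evens_squared.collect())                   # [0, 4, 16, 36, 64]
print(evens_squared.reduce(lambda a, b: a + b))  # 120
print(len(calls))                                # 10: each action recomputed the lineage
print(nums.collect() == list(range(10)))         # True: the parent is unchanged
```

Because this toy keeps no cache, each action replays the recorded lineage, which is why `calls` reaches 10. Real Spark likewise recomputes an RDD for every action unless it is persisted with `cache()` or `persist()`, and the same lineage information is what allows Spark to rebuild lost partitions after a failure.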

PySpark - Data Processing in Python on Top of Apache Spark


#Conference Talks

#EuroPython

#Programming

#Programming Languages

#Python

#Data Science

#Big Data

#Apache Spark

#PySpark

#Data Processing