Description:

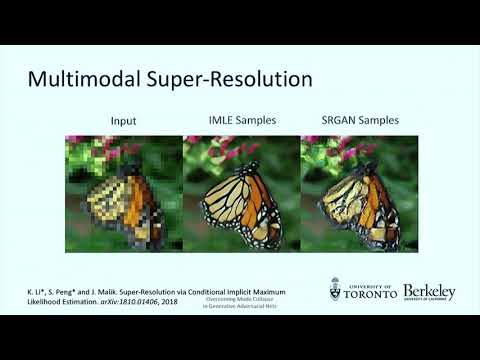

Explore mode collapse in generative adversarial networks (GANs) and the curse of dimensionality in this lecture from the Workshop on Theory of Deep Learning, presented by Ke Li of the University of California, Berkeley. Learn about the problems GANs face, the connection to maximum likelihood estimation, and the F-measure. See applications to multimodal super-resolution and image synthesis from scene layouts, along with unconditional image synthesis using Implicit Maximum Likelihood Estimation (IMLE) on GLO embeddings. The talk also covers nearest-neighbor search: the limitations of existing space-partitioning algorithms, closeness in location versus closeness in rank, and space efficiency on datasets such as CIFAR-100 and MNIST.

Overcoming the Curse of Dimensionality and Mode Collapse - Ke Li
