Description:

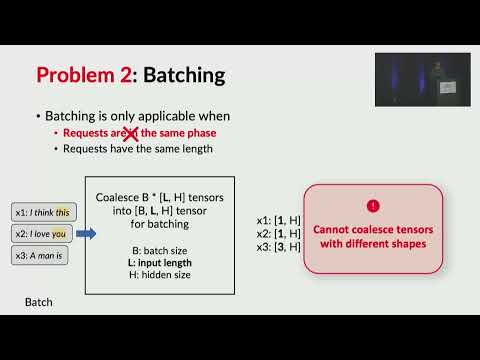

Explore a conference talk on Orca, a distributed serving system designed for Transformer-based generative models. Delve into the challenges of serving large-scale language models like GPT-3, whose autoregressive token generation turns each request into a multi-iteration workload, and discover two techniques that address them: iteration-level scheduling and selective batching. Learn how these techniques improve both latency and throughput compared to existing inference serving systems. Gain insights into Orca's architecture and scheduling mechanisms, and understand why serving cutting-edge generative AI models calls for dedicated system support.

Orca - A Distributed Serving System for Transformer-Based Generative Models

#Conference Talks

#OSDI (Operating Systems Design and Implementation)

#Computer Science

#Distributed Systems

#Operating Systems

#Scheduling Algorithms