Description:

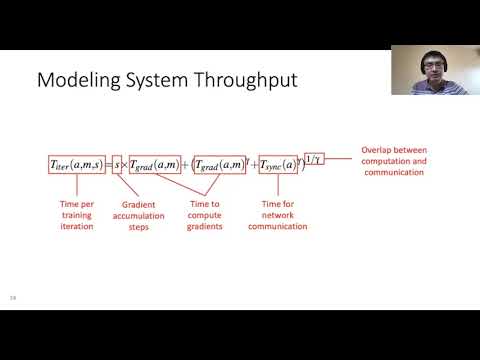

Explore an approach to deep learning cluster scheduling in this 14-minute conference talk from OSDI '21. The talk presents Pollux, a co-adaptive cluster scheduler that optimizes goodput, a metric that combines system throughput with statistical efficiency. Learn how Pollux considers per-job and cluster-wide factors at the same time and dynamically reassigns resources to improve allocation and utilization across the cluster. See how this reduces average job completion times, promotes fairness, and can potentially lower costs in cloud environments. The talk covers background on distributed deep learning, the impact of batch size on system throughput and statistical efficiency, the key components of Pollux's cluster scheduler, the evaluation results, and the broader implications for deep learning cluster management.

Pollux - Co-adaptive Cluster Scheduling for Goodput-Optimized Deep Learning

#Conference Talks

#OSDI (Operating Systems Design and Implementation)

#Computer Science

#Deep Learning

#Distributed Computing