Description:

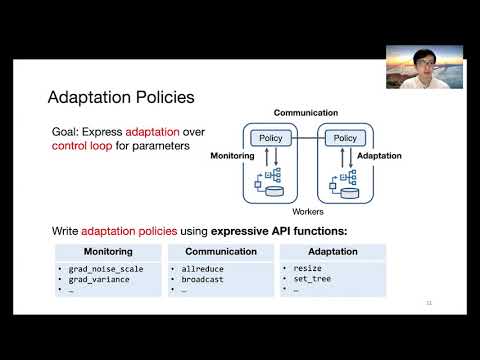

In this OSDI '20 conference talk, explore KungFu, a distributed machine learning library for TensorFlow designed to make training adaptive. Examine the challenge of configuring the many parameters of distributed ML systems, and see how KungFu addresses it through high-level Adaptation Policies (APs). Learn how the library adjusts hyper-parameters and system parameters during training, based on metrics it monitors in real time. Understand how monitoring and control operators are embedded in the dataflow graph, and how an efficient asynchronous collective communication layer keeps these operations concurrent and consistent. Gain insight into the effectiveness of KungFu's adaptive approach, its mechanisms for distributed parameter adaptation, and its potential to improve the efficiency and performance of distributed machine learning training.

KungFu - Making Training in Distributed Machine Learning Adaptive

#Conference Talks

#OSDI (Operating Systems Design and Implementation)

#Computer Science

#Machine Learning

#Distributed Machine Learning