Description:

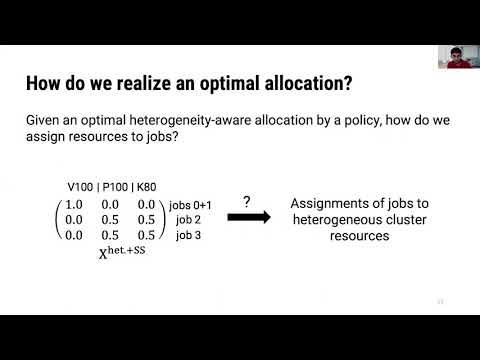

Explore a 20-minute conference talk from OSDI '20 on Gavel, a heterogeneity-aware scheduler for deep learning workloads. Learn how Gavel handles two challenges in cluster management: models perform very differently across specialized accelerators, and clusters must serve diverse scheduling objectives. Discover the concept of effective throughput and how Gavel uses it to turn existing scheduling policies into heterogeneity-aware versions. Understand Gavel's round-based scheduling mechanism, which carries out these policies' resource allocations on a heterogeneous cluster. Examine the performance gains Gavel delivers over heterogeneity-agnostic policies, including the ability to sustain higher input loads and substantial reductions in makespan and average job completion time.
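The two ideas named above, effective throughput and round-based scheduling, can be illustrated with a short Python sketch. This is a minimal illustration under stated assumptions, not Gavel's implementation. `throughputs[m][j]` is assumed to be job m's measured throughput on accelerator type j, and `allocation[m][j]` the fraction of time a policy wants job m to run on type j. Effective throughput is then the time-weighted sum of per-type throughputs. The hypothetical `schedule_round` helper approximates a round-based mechanism by favoring jobs whose received rounds lag furthest behind their target allocation. It also assumes every job needs exactly one worker.

```python
# Hypothetical sketch, not Gavel's code.
# Assumed inputs:
#   throughputs[m][j]     - job m's throughput on accelerator type j
#   allocation[m][j]      - target fraction of time job m spends on type j
#   rounds_received[m][j] - rounds job m has already run on type j
#   workers_per_type[j]   - free workers of type j in this round


def effective_throughput(throughputs, allocation):
    """Time-weighted throughput of each job across accelerator types."""
    return [
        sum(t * x for t, x in zip(t_row, x_row))
        for t_row, x_row in zip(throughputs, allocation)
    ]


def schedule_round(allocation, rounds_received, workers_per_type):
    """Greedily assign jobs to accelerator types for a single round.

    Simplifying assumption: each job uses one worker and runs on at most
    one accelerator type per round. Priority is the target share divided
    by the rounds received so far; a job that has never run on a type it
    is allocated to gets infinite priority there.
    """
    candidates = []
    for m, row in enumerate(allocation):
        for j, share in enumerate(row):
            if share <= 0:
                continue  # the policy never wants job m on type j
            received = rounds_received[m][j]
            priority = float("inf") if received == 0 else share / received
            candidates.append((priority, m, j))

    # Highest priority first; ties broken by job index, then type index.
    candidates.sort(key=lambda c: (-c[0], c[1], c[2]))

    free = list(workers_per_type)
    assigned = {}  # job index -> accelerator type index
    for _, m, j in candidates:
        if m not in assigned and free[j] > 0:
            assigned[m] = j
            free[j] -= 1
    return assigned


if __name__ == "__main__":
    throughputs = [[1.0, 4.0], [2.0, 3.0]]
    allocation = [[0.5, 0.5], [1.0, 0.0]]
    print(effective_throughput(throughputs, allocation))
    print(schedule_round(allocation, [[2, 0], [1, 0]], [1, 1]))
```

In this framing, a heterogeneity-aware policy would optimize over `allocation` with effective throughput in place of raw throughput. That optimization step is omitted here. The round-based step only tries to make the time each job actually receives track the allocation the policy chose.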

Heterogeneity-Aware Cluster Scheduling Policies for Deep Learning Workloads

#Conference Talks

#OSDI (Operating Systems Design and Implementation)

#Mathematics

#Optimization Problems