Description:

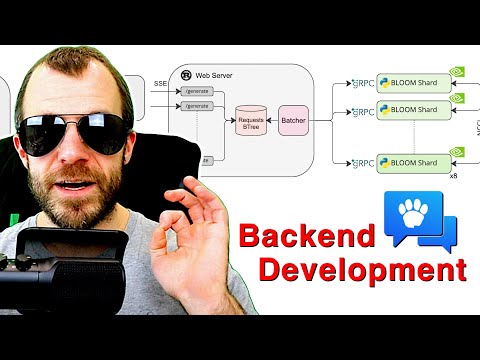

Dive into a hands-on coding session that builds streaming inference into the Hugging Face text generation server. The session touches on CUDA, Python, Rust, gRPC, WebSockets, and server-sent events, and follows the development process as Open Assistant is integrated with the Hugging Face infrastructure. Gain insight into MLOps practices and see how advanced features are implemented in AI-powered text generation systems. The original Hugging Face text generation inference repository and the Open Assistant repository are available for reference during the session, along with free MLOps courses for further study.

Open Assistant Inference Backend Development

#Computer Science

#Machine Learning

#Hugging Face

#Artificial Intelligence

#Programming

#Programming Languages

#Python

#Software Development

#CUDA

#Rust

#Cloud Computing

#Microservices

#gRPC

#Web Development

#Backend Development