Description:

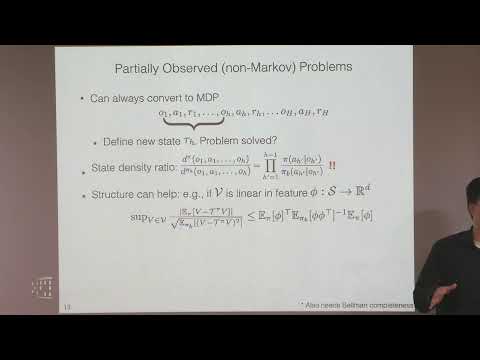

Explore a lecture on off-policy evaluation in non-Markov environments, focusing on the challenges of coverage in partially observable Markov decision processes (POMDPs). Delve into the novel framework of future-dependent value functions and learn about belief coverage and outcome coverage assumptions tailored to POMDP structures. Discover how these concepts enable the first polynomial sample complexity guarantee for off-policy evaluation in POMDPs, addressing the limitations of traditional Markov-based approaches. Gain insights into the practical implications for real-world applications of reinforcement learning, including RLHF in large language models.

On the Curses of Future and History in Off-policy Evaluation in Non-Markov Environments

Add to list