Description:

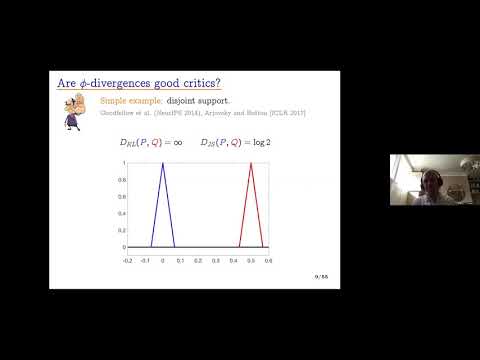

Explore the critic function of implicit generative models in this seminar on Theoretical Machine Learning. Delve into divergence measures, their variational forms, and their topological properties as Arthur Gretton of University College London discusses generalized energy-based models and their applications. Examine the advantages and disadvantages of several approaches, including neural network divergences and the generalized likelihood. Gain insight into smoothness properties, multimodality, and the difficulty of jumping between modes in generative models. Understand the risk of memorization and the importance of realistic sampling in machine learning applications.

On the Critic Function of Implicit Generative Models - Arthur Gretton

#Computer Science

#Machine Learning

#Theoretical Machine Learning

#Mathematics

#Statistics & Probability

#Sampling

#Energy-Based Models