Description:

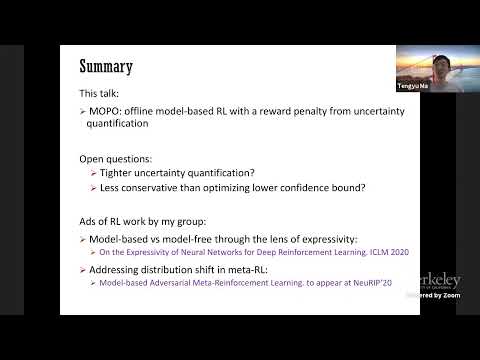

Explore a deep reinforcement learning talk on Model-Based Offline Policy Optimization (MOPO), delivered by Tengyu Ma of Stanford University at the Simons Institute. Delve into topics such as distributional domain shift, answer identification, and improved sketch techniques as the speaker discusses approaches to offline reinforcement learning. Gain insight into the challenges of learning effective policies from pre-collected datasets without direct interaction with the environment, and into the solutions proposed.

MOPO - Model-Based Offline Policy Optimization
