Description:

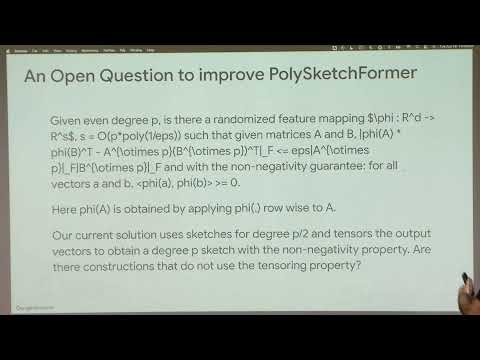

Explore a lecture on improving the efficiency of large machine learning models, covering both data efficiency and faster Transformers. Survey the algorithmic challenges and potential solutions, from theoretical concepts to practical applications. Examine data and model efficiency as subset selection problems, including sequential attention for feature selection and sparsification, and sensitivity sampling for improving model quality and efficiency. Investigate the intrinsic quadratic complexity of attention and of token generation, and learn how HyperAttention yields linear-time attention algorithms under specific conditions. Discover PolySketchFormer, a method that achieves sub-quadratic attention by sketching polynomial functions. Finally, see how clustering techniques can reduce the complexity of token generation.

ML Efficiency for Large Models - From Data Efficiency to Faster Transformers
