Description:

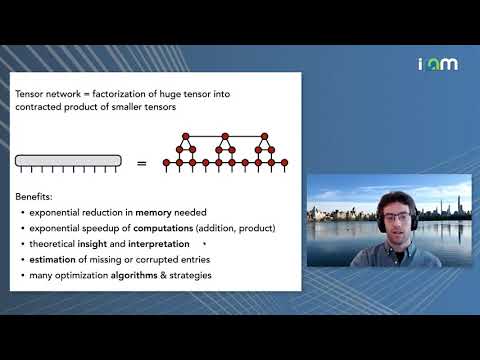

Explore tensor networks for machine learning in this 31-minute conference talk by Miles Stoudenmire of the Flatiron Institute. Tensor networks factorize high-order tensors into contracted products of smaller tensors, which can yield exponential savings in memory and computing time. See how they define a class of model functions with benefits similar to those of kernel methods and neural networks. The talk examines optimization algorithms, theoretical underpinnings, and opportunities for matching model architectures to classes of data, then surveys recent applications and future research prospects. Topics include quantization models, tensor train notation, connections to quantum physics, infinite matrix product states, projected entangled pair states, mutual information in image data, local update algorithms, and potential downsides of tensor network approaches.
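To give a feel for the memory savings mentioned above, here is a minimal sketch in plain Python. It is not taken from the talk: the function names, the open-boundary tensor train layout, and the bond dimension parameter `bond` are illustrative assumptions. It compares the number of entries in a full order-N tensor with the number stored by a tensor train (matrix product state), and evaluates a single tensor element from the train's cores.

```python
# Illustrative sketch only: names and layout are assumptions, not the talk's code.

def full_tensor_size(n_indices: int, dim: int) -> int:
    """Entries in a dense tensor with n_indices indices, each of size dim."""
    return dim ** n_indices


def tensor_train_size(n_indices: int, dim: int, bond: int) -> int:
    """Entries in an open-boundary tensor train with uniform bond dimension.

    Assumes two boundary cores of shape (dim, bond) and
    n_indices - 2 interior cores of shape (bond, dim, bond).
    """
    if n_indices < 2:
        return dim
    return 2 * dim * bond + (n_indices - 2) * bond * dim * bond


def tensor_train_element(cores, indices):
    """Evaluate one tensor element from tensor train cores.

    Each core is a nested list indexed as core[left][physical][right];
    the first core has left size 1 and the last core has right size 1.
    """
    vec = [1.0]  # running row vector over the current bond index
    for core, i in zip(cores, indices):
        right_size = len(core[0][i])
        vec = [
            sum(vec[l] * core[l][i][r] for l in range(len(vec)))
            for r in range(right_size)
        ]
    return vec[0]


if __name__ == "__main__":
    n, d, chi = 20, 2, 8
    print(full_tensor_size(n, d))        # 1048576 entries for the dense tensor
    print(tensor_train_size(n, d, chi))  # 2336 entries for the tensor train
```

With 20 indices of size 2 and an assumed bond dimension of 8, the dense tensor has 1,048,576 entries while the tensor train stores 2,336. The dense count grows exponentially with the number of indices; the tensor train count grows linearly when the bond dimension is held fixed.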

Tensor Networks for Machine Learning and Applications

#Computer Science

#Quantum Computing

#Tensor Networks

#Data Science

#Machine Learning

#Science

#Physics

#Quantum Mechanics

#Quantum Physics

#Kernel Methods

#Algorithms

#Optimization Algorithms