Description:

Explore the intricacies of deep learning performance and loss landscapes in this 46-minute conference talk. Delve into supervised deep learning concepts, including network architecture and dataset setup. Examine learning dynamics as a descent in the loss landscape, drawing parallels with the jamming of granular matter. Analyze theoretical phase diagrams and empirical tests using MNIST parity. Investigate landscape curvature, flat directions, and the potential for overfitting. Consider ensemble averages and how fluctuations impact error rates. Discover scaling arguments and explore infinitely wide networks, including initialization and learning processes. Gain insights into the Neural Tangent Kernel and finite-N asymptotics. Enhance your understanding of deep learning theory and its connections to statistical physics.

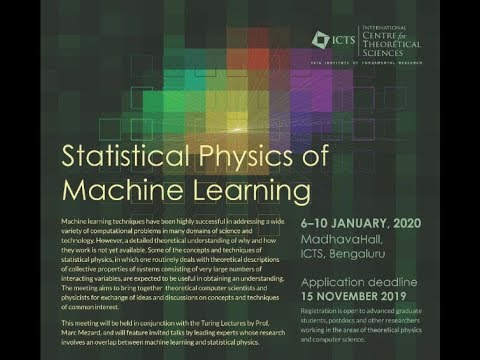

Loss Landscape and Performance in Deep Learning by Stefano Spigler

#Computer Science

#Deep Learning

#Machine Learning

#Supervised Learning

#Computer Networking

#Network Architecture

#Science

#Materials Science

#Phase Diagrams

#Physics

#Statistical Physics