Description:

Save Big on Coursera Plus. 7,000+ courses at $160 off. Limited Time Only!

Grab it

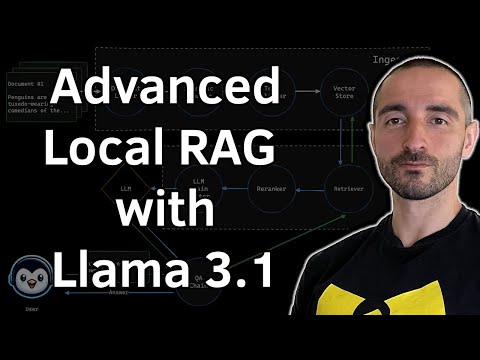

Discover how to build a local Retrieval-Augmented Generation (RAG) system for efficient document processing using Large Language Models (LLMs) in this comprehensive tutorial video. Learn to extract high-quality text from PDFs, split and format documents for optimal LLM performance, create vector stores with Qdrant, implement advanced retrieval techniques, and integrate local and remote LLMs. Follow along to develop a private chat application for your documents using LangChain and Streamlit, covering everything from project structure and UI design to document ingestion, retrieval methods, and deployment on Streamlit Cloud.

Local RAG with Llama 3.1 for PDFs - Private Chat with Documents using LangChain and Streamlit

Add to list

#Computer Science

#Artificial Intelligence

#Natural Language Processing (NLP)

#LangChain

#Programming

#Programming Languages

#Python

#Streamlit

#LLM (Large Language Model)

#LLaMA (Large Language Model Meta AI)

#Machine Learning

#Retrieval Augmented Generation (RAG)

#Databases

#Vector Databases

#Qdrant