Description:

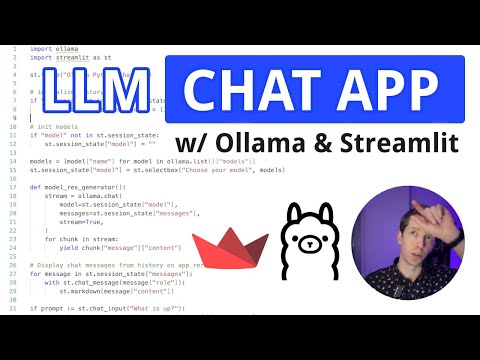

Learn how to build a chat application using Python, Ollama-py, and Streamlit in this 22-minute tutorial. Explore the Ollama Python library's key methods, including list(), show(), and chat(). Set up a Python environment, then use Streamlit to build the user interface. Follow along as the instructor builds the chat app step by step, covering user input, message history, Ollama responses, model selection, and streaming responses. Gain insight into Python generators and how they are applied in the project. By the end, you'll have a working LLM chat app with a user-friendly interface.
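
The streaming and message-history steps mentioned above can be sketched in plain Python. This is a minimal sketch, not the tutorial's code. It assumes each streamed chunk can be indexed as chunk["message"]["content"], which is how chunks from ollama.chat(..., stream=True) can be read, and it substitutes a hard-coded fake stream so it runs without an Ollama server. The helper names stream_content and add_turn are hypothetical.

```python
from typing import Any, Iterable, Iterator, Mapping


def stream_content(chunks: Iterable[Mapping[str, Any]]) -> Iterator[str]:
    """Generator that yields only the text piece of each streamed chat chunk.

    Assumption: each chunk is shaped like {"message": {"content": "..."}}.
    """
    for chunk in chunks:
        yield chunk["message"]["content"]


def add_turn(history: list[dict[str, str]], role: str, content: str) -> None:
    """Append one chat turn to the message history (hypothetical helper)."""
    history.append({"role": role, "content": content})


# Fake stream standing in for ollama.chat(model=..., messages=history, stream=True)
fake_stream = [
    {"message": {"role": "assistant", "content": "Hello"}},
    {"message": {"role": "assistant", "content": ", world"}},
    {"message": {"role": "assistant", "content": "!"}},
]

history: list[dict[str, str]] = []
add_turn(history, "user", "Say hello")

# Consume the generator piece by piece, then store the full reply in history
reply = "".join(stream_content(fake_stream))
add_turn(history, "assistant", reply)
print(reply)  # Hello, world!
```

Because stream_content is a generator, each piece of text is produced only when the caller asks for it, which is what lets a UI show a reply while it is still arriving. In a Streamlit app, such a generator could be handed to st.write_stream (available in recent Streamlit releases), with the history list kept in st.session_state so it survives reruns; check the Ollama and Streamlit documentation for the versions you use.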

Building a Chat App with Ollama-py and Streamlit in Python

#Computer Science

#Artificial Intelligence

#Ollama

#Programming

#Web Development

#Programming Languages

#Python

#Streamlit

#Natural Language Processing (NLP)

#LLM (Large Language Model)

#Web Design

#User Experience Design

#User Interface Design