Description:

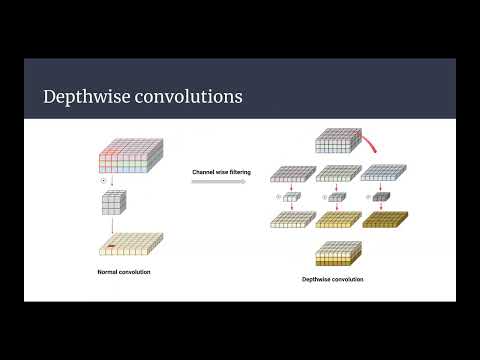

Explore the evolution of vision architectures and the modernization of convolutional neural networks in this 33-minute lecture from the University of Central Florida. Compare the hierarchical design of Swin Transformers with that of CNNs, then follow the macro design changes applied to ResNet and improvements such as inverted bottlenecks and larger kernel sizes. Examine micro design choices, including activation function replacements and normalization layer adjustments. Learn about the final ConvNeXt block, how the network is evaluated, and how machine performance compares across architectures.

Computer Vision Architecture Evolution: ConvNets to Transformers - Lecture 21

#Computer Science

#Artificial Intelligence

#Computer Vision

#Machine Learning

#Activation Functions

#Deep Learning

#ResNet