Description:

Explore interpolation in machine learning in this seminar on Theoretical Machine Learning. Anant Sahai of the University of California, Berkeley presents "Interpolation in learning: steps towards understanding when overparameterization is harmless, when it helps, and when it causes harm." Gain insights into basic principles, the double descent phenomenon, the interpolation regime, and ill climate talk. Examine lower bounds, visualizations, and a paradigmatic example. Investigate aliasing and aliases, develop intuition through matrix interpretations, and understand minimum ℓ2-norm solutions. Discover the reasoning behind these concepts and explore relevant examples throughout this 1 hour 23 minute presentation from the Institute for Advanced Study.

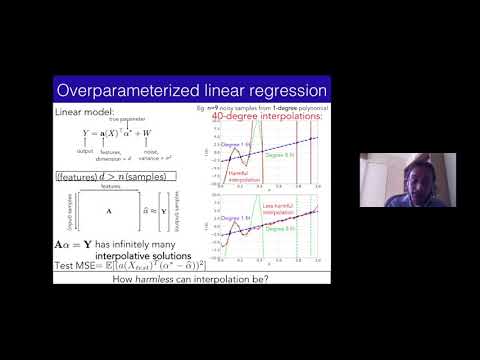

Interpolation in Learning - Steps Towards Understanding When Overparameterization Is Harmless, When It Helps, and When It Causes Harm
