Description:

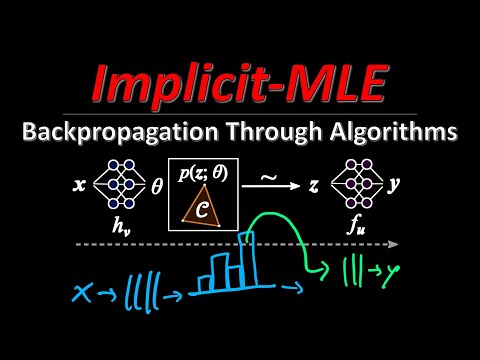

Explore a comprehensive video explanation of the paper "Implicit MLE: Backpropagating Through Discrete Exponential Family Distributions". Delve into the challenges of combining discrete probability distributions and combinatorial optimization problems with neural networks. Learn about Implicit Maximum Likelihood Estimation (I-MLE), a framework for end-to-end learning of models that combine discrete exponential family distributions with differentiable neural components. Discover how I-MLE enables backpropagation through discrete algorithms, so that combinatorial optimizers can be part of a network's forward pass. Follow along as the video breaks down key concepts, including the straight-through estimator, encoding discrete problems as inner products, and approximating marginals via perturb-and-MAP. Gain insight into the paper's contributions, methodology, and practical applications through detailed explanations and visual aids.
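To make the perturb-and-MAP idea mentioned above concrete, here is a minimal NumPy sketch. It is a toy illustration under stated assumptions, not code from the paper or the video: it assumes a plain categorical distribution, where the MAP state is the one-hot vector maximizing the inner product with the parameters, and it assumes Gumbel noise, for which averaging perturbed MAP states recovers the softmax marginals. The names `map_state` and `perturb_and_map_marginals` are hypothetical.

```python
import numpy as np

def map_state(theta):
    """MAP state of a categorical distribution: the one-hot z maximizing <z, theta>."""
    z = np.zeros_like(theta)
    z[np.argmax(theta)] = 1.0
    return z

def perturb_and_map_marginals(theta, num_samples=20000, seed=0):
    """Approximate the marginals by averaging MAP states of noise-perturbed parameters.

    Assumption: Gumbel(0, 1) noise, which makes this the Gumbel-max trick
    for the categorical case.
    """
    rng = np.random.default_rng(seed)
    total = np.zeros_like(theta)
    for _ in range(num_samples):
        noise = rng.gumbel(size=theta.shape)
        total += map_state(theta + noise)
    return total / num_samples

theta = np.array([1.0, 0.0, -1.0])
approx = perturb_and_map_marginals(theta)
exact = np.exp(theta) / np.exp(theta).sum()  # softmax marginals for comparison
```

In this simple case the exact marginals are available in closed form, so `approx` can be checked against `exact`. The appeal of the technique is for structured distributions where only a MAP solver (a combinatorial optimizer) is available and the exact marginals are intractable.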

Implicit MLE: Backpropagating Through Discrete Exponential Family Distributions

#Computer Science

#Deep Learning

#Artificial Intelligence

#Neural Networks

#Backpropagation

#Deep Networks