Description:

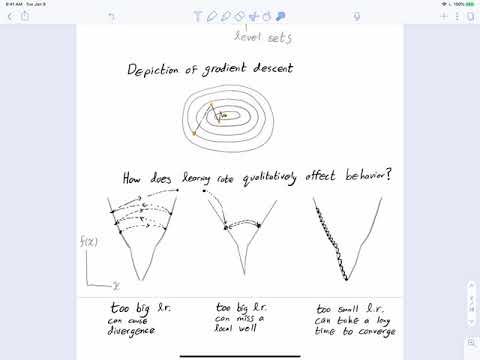

This 57-minute lecture covers gradient descent and stochastic gradient descent as used in deep neural networks. It examines how the learning rate affects optimization, including what goes wrong when the rate is too high or too low, and analyzes the convergence rates of both methods on convex functions. Accompanying notes are available. The lecture is part of Northeastern University's CS 7150 Deep Learning course (Summer 2020). Topics covered: introduction, gradient descent convergence, recovery theorem, proof and interpretation, gradient descent challenges, stochastic gradient descent, step sizes and learning rates, and associated challenges.
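To make the learning-rate discussion concrete, here is a minimal sketch that is not taken from the lecture or its notes. It assumes a toy convex objective f(x) = x^2 for gradient descent, and a small made-up dataset with a 1/(t+1) step-size schedule for stochastic gradient descent. All function and variable names are hypothetical.

```python
import random


def gradient_descent(grad, x0, learning_rate, steps):
    """Plain gradient descent: x <- x - learning_rate * grad(x)."""
    x = x0
    for _ in range(steps):
        x = x - learning_rate * grad(x)
    return x


def grad_f(x):
    # Gradient of the assumed toy objective f(x) = x**2 (minimizer at x = 0).
    return 2.0 * x


# Same start point and step count, three different learning rates.
too_low = gradient_descent(grad_f, x0=1.0, learning_rate=0.001, steps=50)
reasonable = gradient_descent(grad_f, x0=1.0, learning_rate=0.1, steps=50)
too_high = gradient_descent(grad_f, x0=1.0, learning_rate=1.1, steps=50)

print("too low:   ", too_low)     # barely moves away from the start
print("reasonable:", reasonable)  # close to the minimizer 0
print("too high:  ", too_high)    # magnitude grows: the iterates diverge


def sgd_mean(data, x0, steps, seed=0):
    """SGD on f(x) = average of 0.5 * (x - a_i)**2 over the data.

    Each step uses the gradient of one randomly chosen term, (x - a_i),
    with the assumed decaying step size 1 / (t + 1).
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    x = x0
    for t in range(steps):
        a = rng.choice(data)
        x = x - (1.0 / (t + 1)) * (x - a)
    return x


data = [1.0, 2.0, 3.0, 4.0, 5.0]  # made-up data; the minimizer is the mean, 3.0
sgd_estimate = sgd_mean(data, x0=0.0, steps=1000)
print("SGD estimate:", sgd_estimate)  # noisy, but near 3.0
```

On this objective each gradient descent step multiplies x by (1 - 2 * learning_rate), so the iterates shrink toward 0 only when that factor has magnitude below 1. The SGD run lands near the minimizer but not exactly on it, because each step sees a single noisy sample rather than the full gradient.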

Gradient Descent and Stochastic Gradient Descent


#Computer Science

#Machine Learning

#Gradient Descent

#Algorithms and Data Structures

#Mathematics

#Convex Optimization

#Convex Functions

#Algorithms

#Optimization Algorithms

#Deep Learning

#Deep Neural Networks

#Stochastic Gradient Descent