Description:

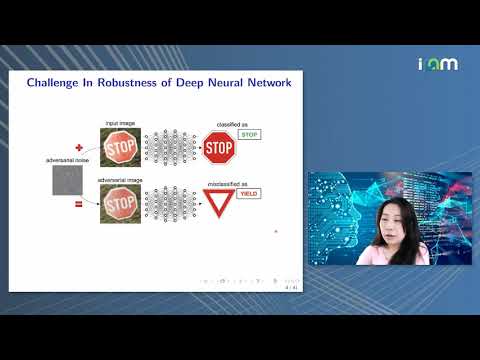

Explore a lecture on using tensor representations to understand, interpret, and design neural network models. Review the challenges facing modern deep neural networks and learn how spectral methods based on tensor decompositions can provide provable performance guarantees. Discover techniques for designing deep neural network architectures that ensure interpretability, expressive power, generalization, and robustness before training begins. Examine how spectral methods can be used to construct "desirable" deep model functions and to guarantee optimal outcomes after training. Investigate compression techniques, CP layers, and low-rank structure. Analyze generalization error bounds and performance evaluations. See how transformers can be interpreted through tensor diagrams, covering single-head and multi-head self-attention mechanisms. The lecture concludes with an overview of improved expressive power and tensor representations for robust learning.

Understanding, Interpreting and Designing Neural Network Models Through Tensor Representations

#Computer Science

#Artificial Intelligence

#Neural Networks

#Deep Learning

#Algorithms

#Computational Complexity

#Mathematics

#Numerical Analysis

#Spectral Methods