Description:

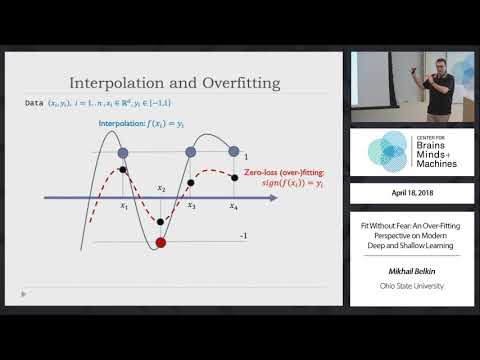

Explore the intriguing world of over-parametrization in modern supervised machine learning through this 55-minute lecture by Mikhail Belkin from Ohio State University. Delve into the paradox of deep networks with millions of parameters that interpolate training data yet perform excellently on test sets. Discover how classical kernel methods exhibit similar properties to deep learning and offer competitive alternatives when scaled for big data. Examine the effectiveness of stochastic gradient descent in driving training errors to zero in the interpolated regime. Gain insights into the challenges of understanding deep learning and the importance of developing a fundamental grasp of "shallow" kernel classifiers in over-fitted settings. Explore the perspective that much of modern learning's success can be understood through the lens of over-parametrization and interpolation, and consider the crucial question of why classifiers in this "modern" interpolated setting generalize so well to unseen data.

Read more

Fit Without Fear - An Over-Fitting Perspective on Modern Deep and Shallow Learning

Add to list

#Computer Science

#Machine Learning

#Kernel Methods

#Deep Learning

#Supervised Learning

#Mathematics

#Interpolation

#Overfitting

#Stochastic Gradient Descent