Description:

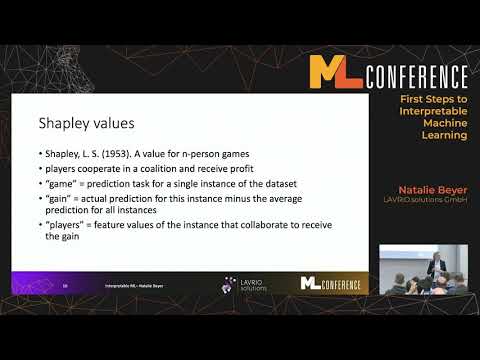

Explore a real-world data science project focused on interpretable machine learning in this 21-minute conference talk from MLCon. Learn how to use packages such as SHAP to explain individual predictions and visualize which features drive a model's outputs. Hear why this approach works well for consulting clients who ask for interpretable machine learning solutions. The speaker walks through the process on a Kickstarter dataset, training a CatBoost model and analyzing feature importance. The talk also covers automated checks, model comparisons, and practical applications of interpretable machine learning in consulting projects.
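SHAP attributes a prediction to features using Shapley values from game theory. As a rough illustration of that idea, the sketch below computes exact Shapley values by brute force over all feature coalitions, treating a feature "absent" from a coalition as taking a baseline value. It is not SHAP's API and not the talk's code: the function `shapley_values`, the toy scoring model, and its Kickstarter-style features (`goal`, `duration`, `has_video`) are hypothetical. The brute-force loop needs 2^n model calls, which is why real tools approximate it or, like SHAP's `TreeExplainer` for tree ensembles such as CatBoost, exploit the model's structure.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> Dict[int, float]:
    """Exact Shapley values explaining model(x) relative to model(baseline).

    Features outside a coalition take their baseline value. This costs
    O(2^n) model calls, so it only suits a handful of features.
    """
    n = len(x)
    phi: Dict[int, float] = {}
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                present = set(coalition)
                without_i = [x[j] if j in present else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]  # add feature i to the coalition
                total += weight * (model(with_i) - model(without_i))
        phi[i] = total
    return phi


# Hypothetical toy "campaign success score" with one interaction term.
def toy_model(features: Sequence[float]) -> float:
    goal, duration, has_video = features
    return 0.5 - 0.3 * goal + 0.01 * duration + 0.2 * has_video + 0.1 * goal * has_video


x = [2.0, 30.0, 1.0]         # campaign being explained
baseline = [1.0, 30.0, 0.0]  # hypothetical reference campaign
phi = shapley_values(toy_model, x, baseline)
print({i: round(v, 6) for i, v in phi.items()})  # {0: -0.25, 1: 0.0, 2: 0.35}
```

The interaction term's effect is split evenly between `goal` and `has_video`, `duration` gets zero because it matches the baseline, and the values sum to `toy_model(x) - toy_model(baseline)`. That additivity is what lets SHAP plots show each feature pushing a prediction up or down from a reference value.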

First Steps to Interpretable Machine Learning

#Conference Talks

#MLCon

#Data Science

#Computer Science

#Machine Learning

#Interpretable Machine Learning

#SHAP