Description:

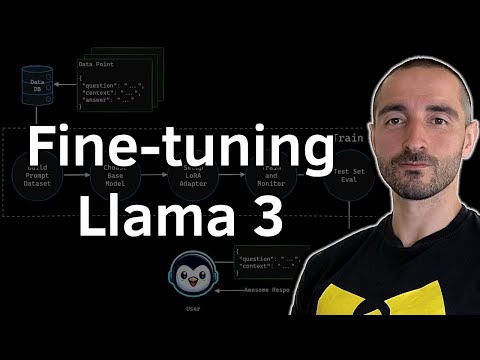

Learn how to fine-tune Llama 3 on a custom dataset for a RAG Q&A use case using a single GPU in this comprehensive 33-minute tutorial. Explore the benefits of fine-tuning, understand the process overview, and dive into practical steps including dataset preparation, model loading, custom dataset creation, and LoRA setup. Follow along with Google Colab setup, establish a baseline, train the model, and evaluate its performance against the base model. Gain insights into pushing the fine-tuned model to the HuggingFace hub and discover how even smaller models can outperform larger ones when properly fine-tuned for specific tasks.

Fine-Tuning Llama 3 on a Custom Dataset for RAG Q&A - Training LLM on a Single GPU

Add to list

#Computer Science

#Machine Learning

#Fine-Tuning

#Deep Learning

#Stable Diffusion

#LoRA (Low-Rank Adaptation)

#High Performance Computing

#Parallel Computing

#GPU Computing

#Retrieval Augmented Generation (RAG)