Description:

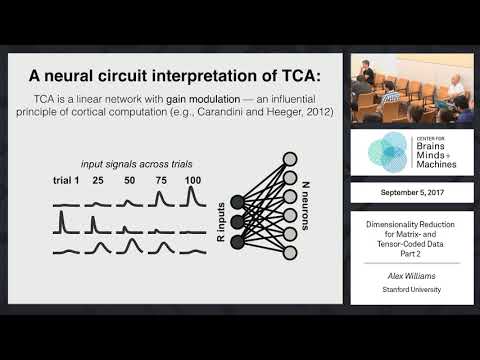

This lecture by Alex Williams of Stanford University covers dimensionality reduction techniques for matrix- and tensor-coded data. It surveys matrix and tensor factorizations, including principal component analysis (PCA), non-negative matrix factorization (NMF), independent component analysis (ICA), and canonical polyadic (CP) tensor decomposition. These methods compress large data tables and higher-order arrays into compact low-dimensional representations, a step that is often needed before scientific insight can be extracted from the data. The lecture reviews recent theoretical results and foundations, with a focus on CP tensor decomposition for higher-order data arrays. Applications are drawn from neuroscience: analysis of neural data across multiple timescales, learning of a sensory discrimination task, and prefrontal cortex encoding during maze navigation. The lecture also shows cases where tensor decomposition outperforms PCA in recovering network parameters and in modeling trial-to-trial variability, and it discusses Kruskal's theorem and its implications for the identifiability of tensor decompositions.
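As a rough illustration of what CP decomposition does, the sketch below fits a rank-R model to a three-way array by alternating least squares (ALS) in NumPy. It is not taken from the lecture: the function names `khatri_rao` and `cp_als`, the array sizes, and the choice of ALS as the fitting procedure are all assumptions made for the example, and the "neurons × time × trials" reading of the axes is only one possible interpretation.

```python
import numpy as np

def khatri_rao(B, C):
    """Column-wise Kronecker product; rows are indexed by (j, k), k varying fastest."""
    r = B.shape[1]
    return np.einsum("jr,kr->jkr", B, C).reshape(-1, r)

def cp_als(X, rank, n_iter=500, seed=0):
    """Hypothetical helper: fit X[i,j,k] ~ sum_r A[i,r] * B[j,r] * C[k,r] by ALS."""
    rng = np.random.default_rng(seed)
    I, J, K = X.shape
    A = rng.standard_normal((I, rank))
    B = rng.standard_normal((J, rank))
    C = rng.standard_normal((K, rank))
    # Unfold the tensor along each mode (row-major, so the last index varies fastest).
    X0 = X.reshape(I, J * K)                     # rows i, columns (j, k)
    X1 = np.moveaxis(X, 1, 0).reshape(J, I * K)  # rows j, columns (i, k)
    X2 = np.moveaxis(X, 2, 0).reshape(K, I * J)  # rows k, columns (i, j)
    for _ in range(n_iter):
        # Each update is an ordinary least-squares solve with the other two factors fixed.
        A = X0 @ khatri_rao(B, C) @ np.linalg.pinv((B.T @ B) * (C.T @ C))
        B = X1 @ khatri_rao(A, C) @ np.linalg.pinv((A.T @ A) * (C.T @ C))
        C = X2 @ khatri_rao(A, B) @ np.linalg.pinv((A.T @ A) * (B.T @ B))
    return A, B, C

# Synthetic example (assumed sizes): 8 "neurons" x 7 "time bins" x 6 "trials", true rank 2.
rng = np.random.default_rng(1)
A_true = rng.standard_normal((8, 2))
B_true = rng.standard_normal((7, 2))
C_true = rng.standard_normal((6, 2))
X = np.einsum("ir,jr,kr->ijk", A_true, B_true, C_true)

A, B, C = cp_als(X, rank=2)
X_hat = np.einsum("ir,jr,kr->ijk", A, B, C)
rel_err = np.linalg.norm(X - X_hat) / np.linalg.norm(X)
print(f"relative reconstruction error: {rel_err:.2e}")
```

The fitted model stores 2 × (8 + 7 + 6) = 42 numbers in place of the 336 entries of the full array, which is the sense in which the factorization compresses the data. ALS is used here only because it is short to write down; it can converge slowly or stall on harder problems, so a maintained library implementation is the safer choice for real data.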


Dimensionality Reduction for Matrix- and Tensor-Coded Data
