Description:

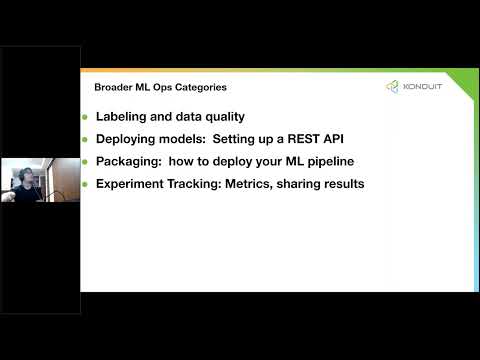

Explore a 45-minute conference talk surveying techniques for optimizing deep learning pipelines. Dive into optimization methods such as quantization, model distillation, and efficient math library selection, and examine their trade-offs in deployment scenarios. Gain insight into performance optimization measured by latency and throughput (examples per second), aimed at software engineers, data scientists, and developers working with large-scale machine learning models. Review key aspects of ML pipeline deployment, including data transformation, ETL processes, model storage formats, and emerging deep learning compilers. Learn strategies to reduce model size, improve memory efficiency, and increase computational speed through techniques such as pruning, batch norm folding, and knowledge distillation.

Deploying Optimized Deep Learning Pipelines

#Computer Science

#Deep Learning

#Artificial Intelligence

#Computer Vision

#Machine Learning

#Quantization

#Programming

#Web Development

#REST APIs

#Experiment Tracking