Description:

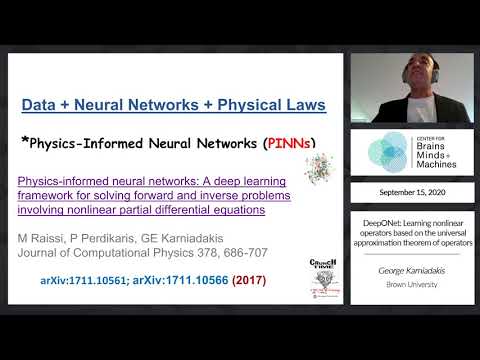

Explore a lecture on DeepONet, a neural network architecture designed to learn nonlinear operators, grounded in the universal approximation theorem for operators. George Karniadakis of Brown University presents the theoretical foundations, practical applications, and distinguishing features of DeepONet, and shows how it approximates complex continuous operators and systems with high accuracy. Examples include explicit operators such as integrals and fractional Laplacians, as well as implicit operators representing deterministic and stochastic differential equations. The lecture covers the network's structure, which consists of a branch network that encodes the input function sampled at discrete points and a trunk network that encodes the domain of the output function. It also analyzes how different formulations of the input function space affect generalization error, and explores potential applications in fields such as fluid mechanics and brain aneurysm modeling. Later topics include autonomy, hidden fluid mechanics, and multiphysics simulations, along with future research directions and possible improvements to the DeepONet architecture.
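For readers who want a concrete picture of the branch and trunk structure before watching, here is a minimal NumPy sketch. It is not taken from the lecture: the layer sizes, the number of sensors `m`, the latent width `p`, and the helper names `init_mlp`, `mlp`, and `deeponet` are all illustrative assumptions, and the weights are random and untrained. It only shows how the two sub-networks are combined as G(u)(y) ≈ Σ_k b_k(u) · t_k(y).

```python
import numpy as np

def init_mlp(sizes, rng):
    """Random (untrained) weights for a fully connected net; sizes are assumed."""
    return [(rng.normal(scale=1.0 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def mlp(params, x):
    """Apply the net: tanh on hidden layers, linear output layer."""
    for W, b in params[:-1]:
        x = np.tanh(x @ W + b)
    W, b = params[-1]
    return x @ W + b

def deeponet(branch, trunk, u_sensors, y):
    """Combine branch and trunk outputs with a dot product over p features."""
    b = mlp(branch, u_sensors)  # (n_functions, p): encodes each sampled input function
    t = mlp(trunk, y)           # (n_points, p): encodes each output location
    return b @ t.T              # (n_functions, n_points)

rng = np.random.default_rng(0)
m, p = 50, 16                          # hypothetical sensor count and latent width
branch = init_mlp([m, 32, p], rng)
trunk = init_mlp([1, 32, p], rng)

x_sensors = np.linspace(0.0, 1.0, m)   # fixed sensor locations for the input function
u = np.stack([np.sin(2 * np.pi * x_sensors), x_sensors ** 2])  # two input functions
y = np.linspace(0.0, 1.0, 7)[:, None]  # seven locations where the output is evaluated

out = deeponet(branch, trunk, u, y)
print(out.shape)  # (2, 7)
```

In practice both networks would be trained jointly on pairs of input functions and operator outputs; the sketch leaves training out entirely.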

DeepONet - Learning Nonlinear Operators Based on the Universal Approximation Theorem of Operators

#Computer Science

#Artificial Intelligence

#Neural Networks

#Mathematics

#Calculus

#Integrals

#Differential Equations

#Stochastic Differential Equation