Description:

Save Big on Coursera Plus. 7,000+ courses at $160 off. Limited Time Only!

Grab it

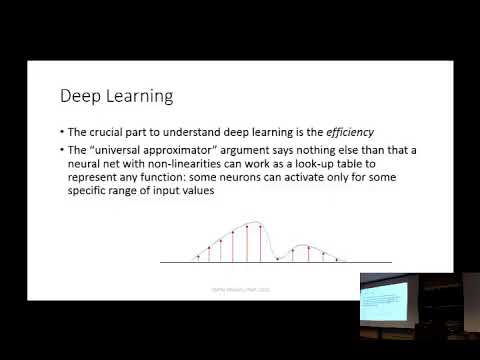

In this 57-minute lecture, Tomas Mikolov, a research scientist at Facebook AI Research, surveys deep learning in natural language processing and beyond. The talk reviews success stories of advanced machine learning techniques in NLP, with a focus on recurrent neural networks, and explains what motivates researchers to adopt deep learning approaches. Topics include neural network fundamentals (neurons, activation functions, and hidden layers), recurrent neural networks for language modeling, extensions such as Long Short-Term Memory networks, and a performance comparison on the Penn Treebank dataset. The talk also presents novel ideas for future research aimed at building machines that can understand natural language and communicate with humans. It was presented in 2015 at the Center for Language & Speech Processing (CLSP) at Johns Hopkins University.

Deep Learning in NLP and Beyond - 2015

#Computer Science

#Deep Learning

#Artificial Intelligence

#Machine Learning

#Neural Networks

#Recurrent Neural Networks (RNN)

#Long Short-Term Memory (LSTM)

#Word Embeddings

#Language Models

#Word2Vec